Behavioral Risks (Red-Teaming) in Mend AI

Note: This feature is only available with Mend AI Premium.

Contact your Customer Success Manager at Mend.io for more details about Mend AI Premium.

Overview

Red Teaming is essentially prompt-based adversarial testing. It’s a practice where attacks on an organization's AI systems are simulated, to identify weaknesses.

Mend AI provides a single-pane-of-glass experience with dynamic AI Insights being displayed within the Mend AppSec Platform, enabling users to conduct red teaming and dynamic security tests specifically tailored for AI applications. It enhances the ability to identify, analyze, and mitigate vulnerabilities in AI systems, improving efficiency, usability, and overall security posture.

Prerequisites

The following IPs need to be enabled in the firewall for detection of Behavioral Risks in Mend AI:

Getting it done

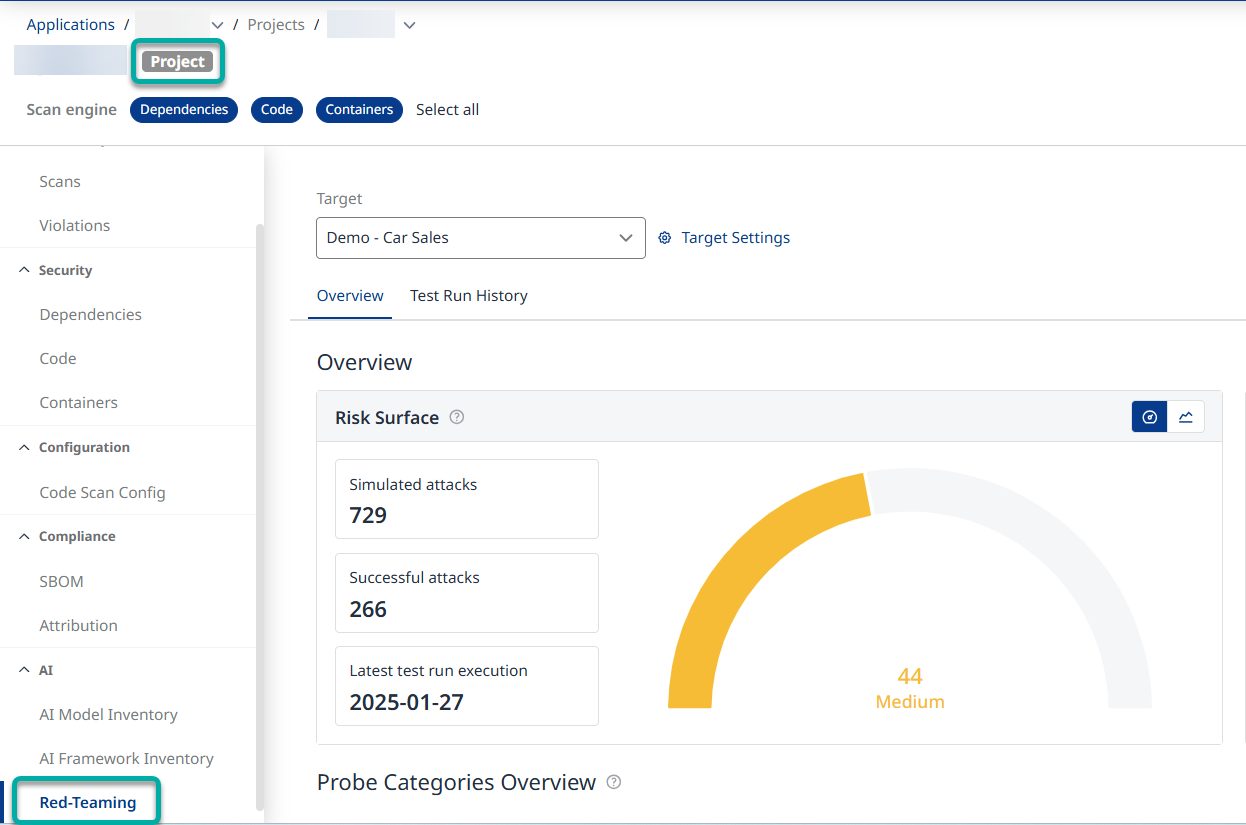

Red-Teaming is available in the Project context. Once the relevant project is accessed, make sure to select Red-Teaming from the left-pane menu:

Please use the table of contents below to navigate the Red-Teaming documentation:

Probe Catalog

- Context Leakage

- Fake News

- Jailbreak

- RAG Poisoning

- Bias

- Competitor Check

- Harmful Content

- Intentional Misuse

- Off Topic

- Profanity

- Paranoid Protection

- RAG Precision

- URL Check

- Q&A

- Data Exfiltration

- Manipulation

- Phishing

- PII

- Legally Binding

- Web Injection

- Cyber Threats

- Privacy Violation

- Illegal Activities

- Fraudulent Activities

- Code Execution

- Custom Probe

- Custom Datasets Probe