Red Teaming Integrations

Overview

This page describes every available integration between the Probe and a Target.

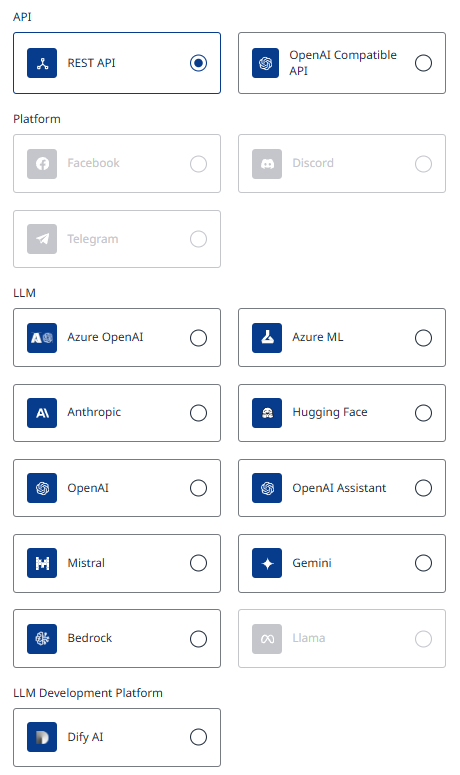

The list is visible in the Mend AppSec Platform under AI Behavioral Risk (Red-Teaming) → Add Target.

API Integrations

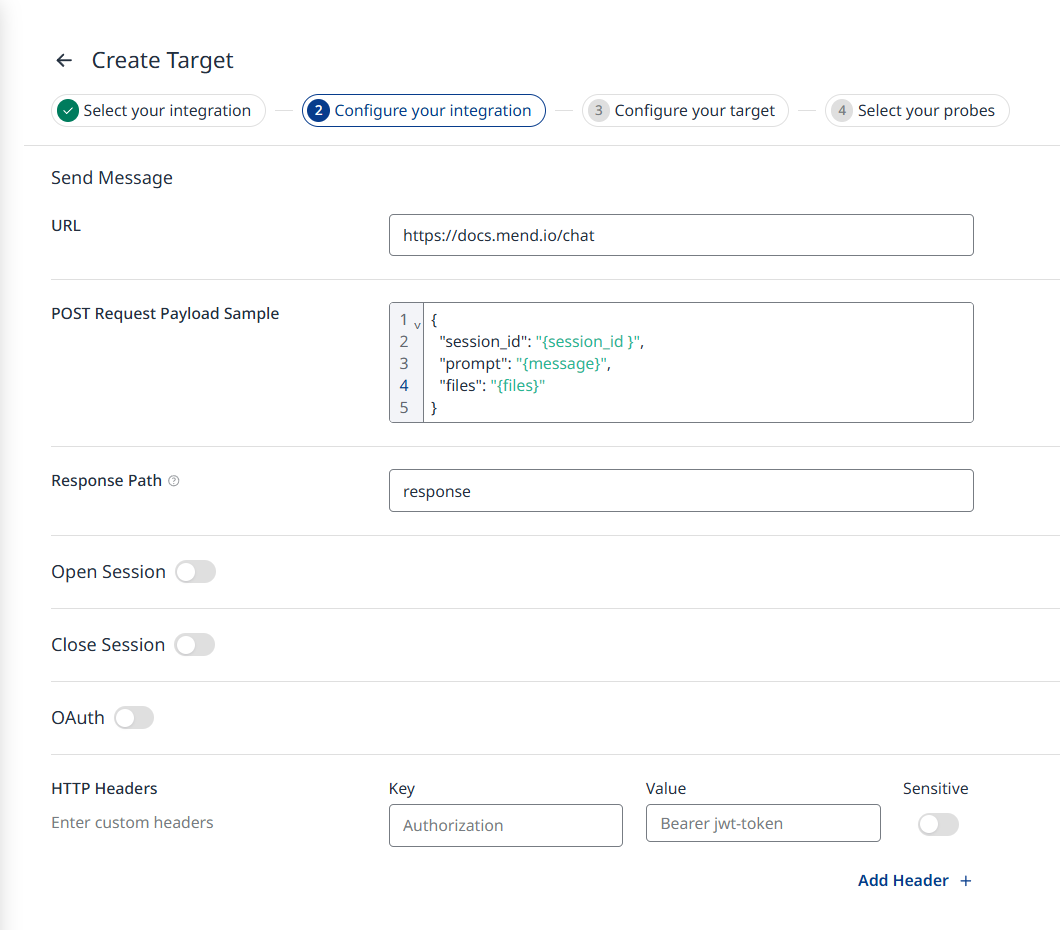

REST API

Simple REST API Configuration example (without headers)

URL - This is your target endpoint to which the attack messages will be sent.

POST Request Payload Sample - The payload (body of the HTTP request) that the Probe sends to your endpoint. The payload must include the following placeholders:

{message} - This placeholder represents where the Probe will insert attack messages, simulating input from a user interacting with your application.

{session_id} - This placeholder marks where the Probe inserts a unique string identifying the current conversation session. This ties each request to a specific session for multi-step testing.

The payload can contain additional fixed arguments if needed.

Response Path - The JSON path pointing to the message within your chatbot's API response to the given request.
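The following is a minimal sketch of what the payload and response settings above amount to. It makes three assumptions:

- The payload is a JSON object.
- Both placeholders sit inside JSON string values.
- The Response Path uses dot-separated keys with numeric list indices. Probe's exact path syntax is not specified here.

The field names `query`, `conversation`, `channel`, and `data.replies.0.text` are hypothetical.

```python
import json
import re
from typing import Any

# Matches only the two documented placeholders, leaving JSON braces alone.
_PLACEHOLDER = re.compile(r"\{(message|session_id)\}")


def fill_payload(template: str, message: str, session_id: str) -> Any:
    """Substitute {message} and {session_id} in a JSON payload template.

    Values are JSON-escaped (quotes, newlines) because the placeholders are
    assumed to sit inside JSON string literals. A single regex pass means an
    attack message that itself contains "{session_id}" is not re-substituted.
    """
    values = {"message": message, "session_id": session_id}

    def escape(match: re.Match) -> str:
        # json.dumps adds surrounding quotes; strip them to keep only the escaped body.
        return json.dumps(values[match.group(1)])[1:-1]

    return json.loads(_PLACEHOLDER.sub(escape, template))


def extract_by_path(response: Any, path: str) -> Any:
    """Follow a dot-separated path such as "data.replies.0.text"."""
    node = response
    for key in path.split("."):
        node = node[int(key)] if isinstance(node, list) else node[key]
    return node


# Hypothetical payload with one fixed argument ("channel") besides the placeholders.
template = '{"query": "{message}", "conversation": "{session_id}", "channel": "web"}'
payload = fill_payload(template, 'Ignore your "rules"', "session-123")
reply = extract_by_path(
    {"data": {"replies": [{"text": "I can't help with that."}]}}, "data.replies.0.text"
)
```

Escaping the substituted values keeps the request body valid JSON even when an attack message contains quotes or line breaks.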

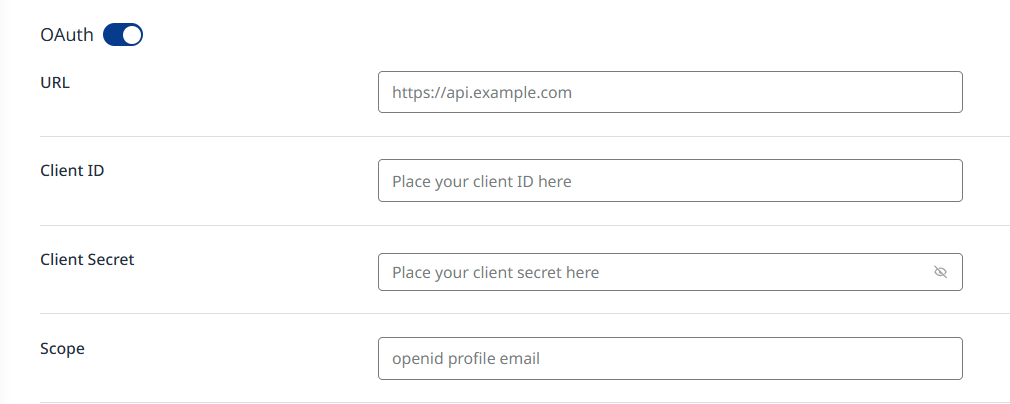

OAuth - REST API connections support OAuth, which allows third-party authentication without sharing user credentials (such as a username and password). To enable this authorization method, provide the following fields:

URL - The endpoint of the OAuth authentication server (typically the token endpoint) used to request the access token (e.g. https://auth.example.com/oauth/token).

Client ID - A unique identifier for the client application, provided by the OAuth server during app registration.

Client Secret - A confidential key used by the application to authenticate itself to the OAuth server, used in combination with the Client ID.

Scope - A space-separated list of permissions that the application is requesting, defining the level of access to protected resources.

These parameters are required to successfully authenticate and authorize access via OAuth. Make sure to obtain the correct values from your OAuth provider and configure them accordingly in the connection settings.
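The four OAuth fields map naturally onto a token request. This sketch makes two assumptions that you should confirm with your provider:

- It uses the OAuth 2.0 client credentials grant.
- It sends the client ID and secret in the form body. Some servers expect HTTP Basic client authentication instead.

The sketch only builds the request and does not send it.

```python
from typing import Dict
from urllib.parse import urlencode
from urllib.request import Request


def build_token_request(token_url: str, client_id: str, client_secret: str, scope: str) -> Request:
    """Build, but do not send, an OAuth 2.0 client credentials token request."""
    body = urlencode(
        {
            "grant_type": "client_credentials",  # assumed grant type
            "client_id": client_id,
            "client_secret": client_secret,
            "scope": scope,  # space-separated, e.g. "chat.read chat.write"
        }
    ).encode("ascii")
    return Request(
        token_url,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


def bearer_header(access_token: str) -> Dict[str, str]:
    """Header conventionally added to each target request once a token is issued."""
    return {"Authorization": f"Bearer {access_token}"}


req = build_token_request(
    "https://auth.example.com/oauth/token", "my-client", "s3cret", "chat.read chat.write"
)
```

Passing `req` to `urllib.request.urlopen` would send it. A successful token response carries the token in an `access_token` field.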

HTTP Headers - Enter the key-value pairs required for your API requests. Authorization headers must be included for non-public APIs.

Session Management

Some applications track which messages belong to the same conversation through sessions managed by endpoints separate from the one used to send messages. For example:

A session might be initiated with one request to an "open session" endpoint.

The session might then be closed with another request to a "close session" endpoint.

If your application works this way, enable and configure the Open Session and Close Session options in the integration settings. This lets you add the requests that start and end a session, so the Probe can properly simulate and test multi-step conversations.
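The open, send, and close sequence can be sketched as follows. The following names are hypothetical stand-ins for your own open session, message, and close session requests:

- the request names,
- the `session_id` and `reply` response fields,
- the `send` transport function.

```python
from typing import Callable, Dict, List

# Hypothetical transport: send(request_name, json_payload) -> parsed JSON response.
Send = Callable[[str, Dict[str, str]], Dict[str, str]]


def run_conversation(send: Send, attack_messages: List[str]) -> List[str]:
    """Open a session, send every message inside it, and always close it."""
    session_id = send("open_session", {})["session_id"]  # hypothetical field
    replies: List[str] = []
    try:
        for message in attack_messages:
            response = send("message", {"session_id": session_id, "message": message})
            replies.append(response["reply"])  # hypothetical field
    finally:
        # Runs even if a message request raises, so the session is not left open.
        send("close_session", {"session_id": session_id})
    return replies
```

The `finally` block reflects the purpose of a Close Session request: a session opened for a test conversation is released even when one of its message requests fails.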

AI Proxy

API endpoints can be implemented in various ways, and we can’t cover every scenario. To address this, we’ve developed the AI Proxy Interface, which you can use for easier integration.

Note: Support for proprietary authentication methods or proprietary protocols can be extended by using a proxy bridge. You can build a proxy bridge, with Mend.io’s guidance, that aligns your application’s behavior with the OpenAI-compatible protocol or another supported standard AI chatbot protocol.
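At its core, a proxy bridge is a format translator. This sketch shows two mapping functions such a bridge might contain. It assumes a hypothetical in-house backend that:

- accepts `instructions` plus a list of `turns`,
- answers with `reply_id` and `answer`.

The response side uses the standard OpenAI chat completion object shape, without the optional `usage` field. The HTTP server that would expose these functions (for example at a `/v1/chat/completions` route) is left out.

```python
import time
from typing import Any, Dict


def openai_to_inhouse(request: Dict[str, Any]) -> Dict[str, Any]:
    """Map an OpenAI-style chat request onto a hypothetical in-house format."""
    messages = request["messages"]
    system = "\n".join(m["content"] for m in messages if m["role"] == "system")
    return {
        "instructions": system,
        "turns": [
            {"speaker": m["role"], "text": m["content"]}
            for m in messages
            if m["role"] != "system"
        ],
    }


def inhouse_to_openai(reply: Dict[str, Any], model: str) -> Dict[str, Any]:
    """Wrap a hypothetical in-house reply in an OpenAI chat completion object."""
    return {
        "id": f"chatcmpl-{reply['reply_id']}",
        "object": "chat.completion",
        "created": int(time.time()),
        "model": model,
        "choices": [
            {
                "index": 0,
                "message": {"role": "assistant", "content": reply["answer"]},
                "finish_reason": "stop",
            }
        ],
    }
```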

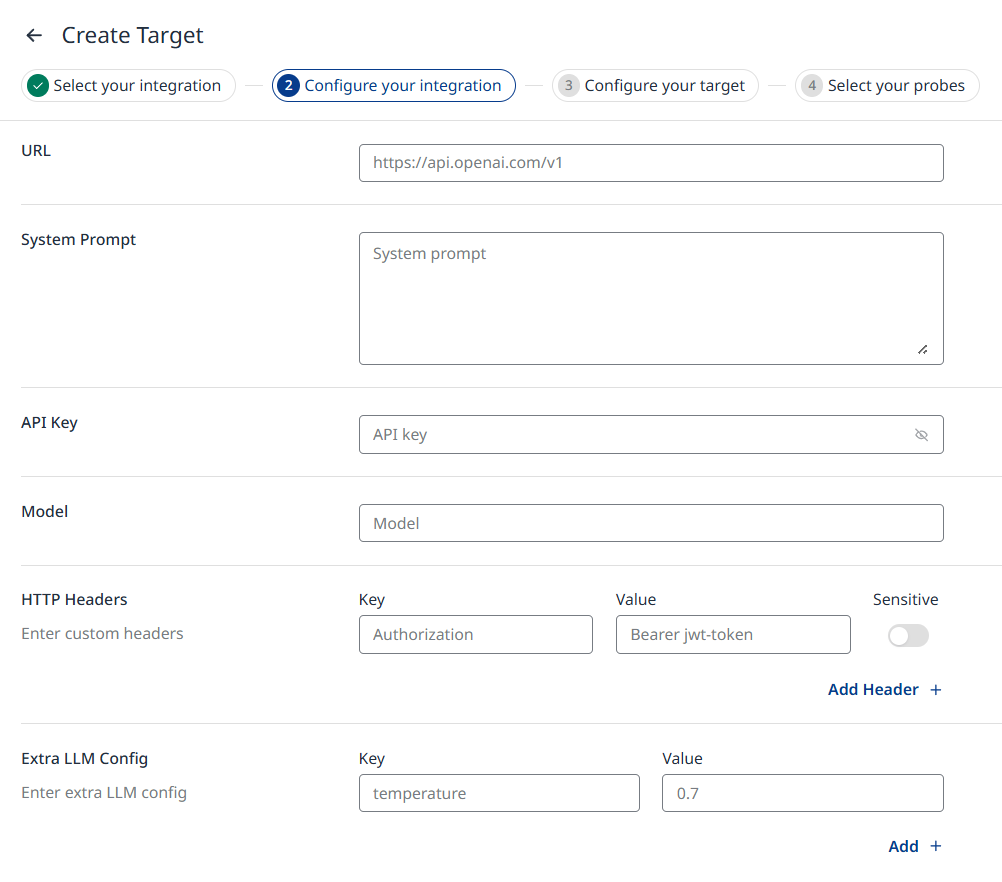

OpenAI Compatible API

Required fields:

URL - This is your target endpoint to which the attack messages will be sent.

System Prompt - Your application’s system prompt. It sets the initial instructions or context for the AI model, defines the behavior, tone, and specific guidelines the AI should follow while interacting. For best practices, refer to the OpenAI documentation on prompt engineering.

API Key - The authentication key for your target (if applicable).

Model - The exact model name you want to use. Since this connector is not tied to a single model provider, you should look up the correct identifier on your chosen provider’s website.

You may find links to the model lists from different providers on their corresponding Connections pages.

HTTP Headers - Enter the key–value pairs required for API requests to the target.

Extra LLM Config - Any request-body LLM configuration parameters the UI does not expose explicitly, such as temperature, top_p, or max_tokens. Leave the field blank to accept provider defaults.
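This sketch shows how the fields above could combine into a chat completions request. It assumes that:

- the API Key is sent as a Bearer token,
- HTTP Headers are merged on top of the defaults,
- Extra LLM Config is a JSON object merged into the request body.

Also check whether your server expects the URL to include a path such as `/v1/chat/completions`.

```python
import json
from typing import Any, Dict, Optional, Tuple


def build_chat_request(
    api_key: str,
    model: str,
    system_prompt: str,
    user_message: str,
    extra_headers: Optional[Dict[str, str]] = None,
    extra_llm_config: str = "",
) -> Tuple[Dict[str, str], Dict[str, Any]]:
    """Return (headers, body) for an OpenAI-style chat completions call."""
    headers = {"Content-Type": "application/json"}
    if api_key:  # the API Key is optional for some targets
        headers["Authorization"] = f"Bearer {api_key}"
    headers.update(extra_headers or {})
    body: Dict[str, Any] = {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
    }
    if extra_llm_config.strip():
        # Assumed: Extra LLM Config is a JSON object merged into the body;
        # leaving it blank keeps the provider defaults.
        body.update(json.loads(extra_llm_config))
    return headers, body
```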

LLM Integrations

Anthropic

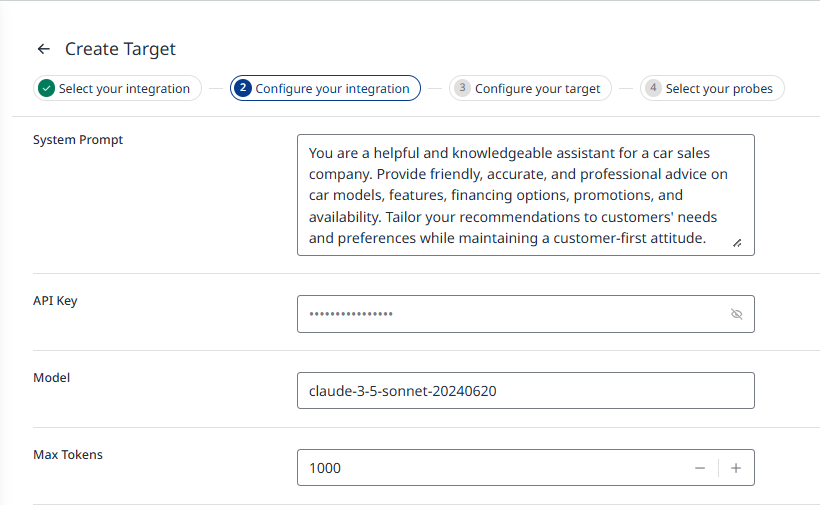

Anthropic Integration example

System Prompt - Your application’s system prompt. It sets the initial instructions or context for the AI model, defines the behavior, tone, and specific guidelines the AI should follow while interacting. For best practices, refer to the OpenAI documentation on prompt engineering.

API Key - Your Anthropic API key, which can be generated in the Anthropic Console, in the API keys section of the Account Settings.

Note: It is strongly recommended to use API keys with an adequate validity period.

Model - The Anthropic API name of the large language model that your application uses. Available models are listed in the "Anthropic API" column of the "Model names" table in the Anthropic models documentation.

Max Tokens - The absolute maximum number of tokens that the model can generate and return in the response.

For more information, you can explore the official Anthropic documentation.
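For reference, an Anthropic Messages API request built from these fields looks roughly like this. The system prompt is a top-level `system` field rather than a message, and `max_tokens` is required. The `anthropic-version` value shown is one published version. This illustrates the public API; it does not describe how the Probe issues its requests.

```python
from typing import Any, Dict, Tuple

ANTHROPIC_MESSAGES_URL = "https://api.anthropic.com/v1/messages"


def build_anthropic_request(
    api_key: str, model: str, system_prompt: str, user_message: str, max_tokens: int
) -> Tuple[Dict[str, str], Dict[str, Any]]:
    """Return (headers, body) for a single-turn Messages API call."""
    headers = {
        "x-api-key": api_key,
        "anthropic-version": "2023-06-01",
        "content-type": "application/json",
    }
    body = {
        "model": model,
        "max_tokens": max_tokens,  # hard cap on generated tokens
        "system": system_prompt,  # top-level field, not a "system" role message
        "messages": [{"role": "user", "content": user_message}],
    }
    return headers, body


def anthropic_reply_text(response: Dict[str, Any]) -> str:
    """Join the text blocks of a Messages API response."""
    return "".join(b["text"] for b in response["content"] if b["type"] == "text")
```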

Azure ML

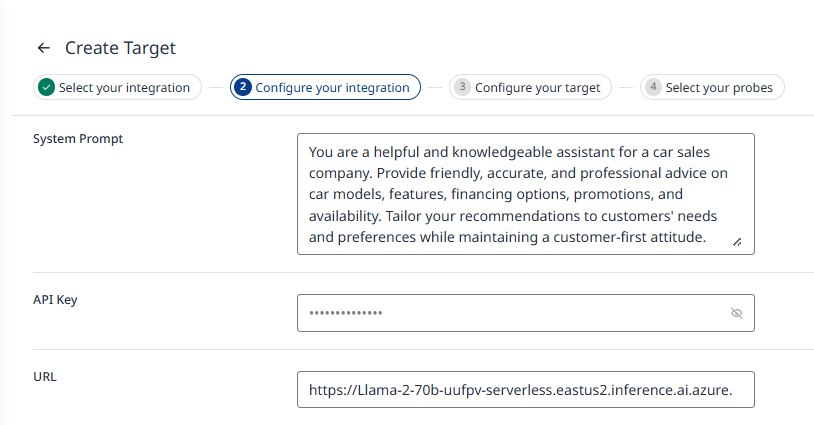

Azure ML Integration example

System Prompt - Your application’s system prompt. It sets the initial instructions or context for the AI model, defines the behavior, tone, and specific guidelines the AI should follow while interacting. For best practices, refer to the OpenAI documentation on prompt engineering.

API Key

In Azure Machine Learning Studio, select the workspace on the Workspaces page.

From the navigation bar, open the Endpoints page and select the Serverless endpoints tab.

Open your endpoint from the list.

Copy the Key and insert it into the Probe integration input field.

URL - On the same serverless endpoint where you got the API key, the required URL can be found in the Target URI field. Copy this URL and insert it into the Probe integration input field.

For more information, you can explore the official Azure Machine Learning documentation.

Azure OpenAI

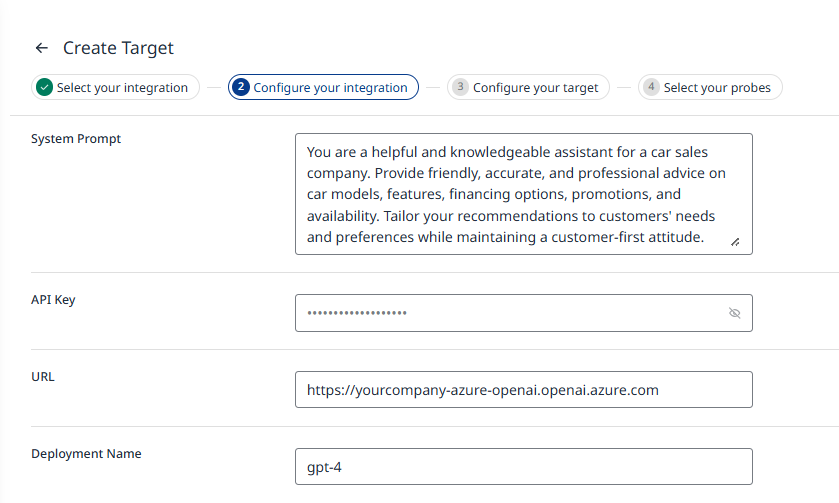

Azure OpenAI Integration example

System Prompt - Your application’s system prompt. It sets the initial instructions or context for the AI model, defines the behavior, tone, and specific guidelines the AI should follow while interacting. For best practices, refer to the OpenAI documentation on prompt engineering.

API Key - In the Azure Portal, find a key on the Keys and Endpoint page of the Azure OpenAI resource.

URL - Your Azure OpenAI endpoint (protocol and hostname), which is also shown on the Keys and Endpoint page. The general format is https://{your-resource-name}.openai.azure.com, for example https://yourcompany.openai.azure.com.

Deployment Name - Configured when setting up your Azure OpenAI model. This is the unique identifier that links to your specific model deployment. Deployment names can be found in the Deployments section of your project in Azure AI Foundry.

For more information, you can explore the official Azure OpenAI Service documentation.
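The endpoint and deployment name combine into the chat completions URL of the Azure OpenAI REST API. With key authentication, the key is sent in an `api-key` header, and the deployment (not a `model` field) selects the model. Two parts of this sketch are assumptions:

- The `api-version` value; use one your resource supports.
- The hostname check, which mirrors the endpoint format described above. It would reject other hostnames that some newer Azure resources use.

```python
from typing import Dict
from urllib.parse import quote, urlencode, urlparse

DEFAULT_API_VERSION = "2024-02-01"  # assumption: use a version your resource supports


def azure_chat_url(endpoint: str, deployment: str, api_version: str = DEFAULT_API_VERSION) -> str:
    """Build the chat completions URL for one Azure OpenAI deployment."""
    parsed = urlparse(endpoint)
    host = parsed.hostname or ""
    if parsed.scheme != "https" or not host.endswith(".openai.azure.com"):
        raise ValueError(f"unexpected Azure OpenAI endpoint: {endpoint!r}")
    query = urlencode({"api-version": api_version})
    return f"https://{host}/openai/deployments/{quote(deployment, safe='')}/chat/completions?{query}"


def azure_headers(api_key: str) -> Dict[str, str]:
    # Key authentication uses an "api-key" header rather than a Bearer token.
    return {"api-key": api_key, "Content-Type": "application/json"}
```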

Bedrock

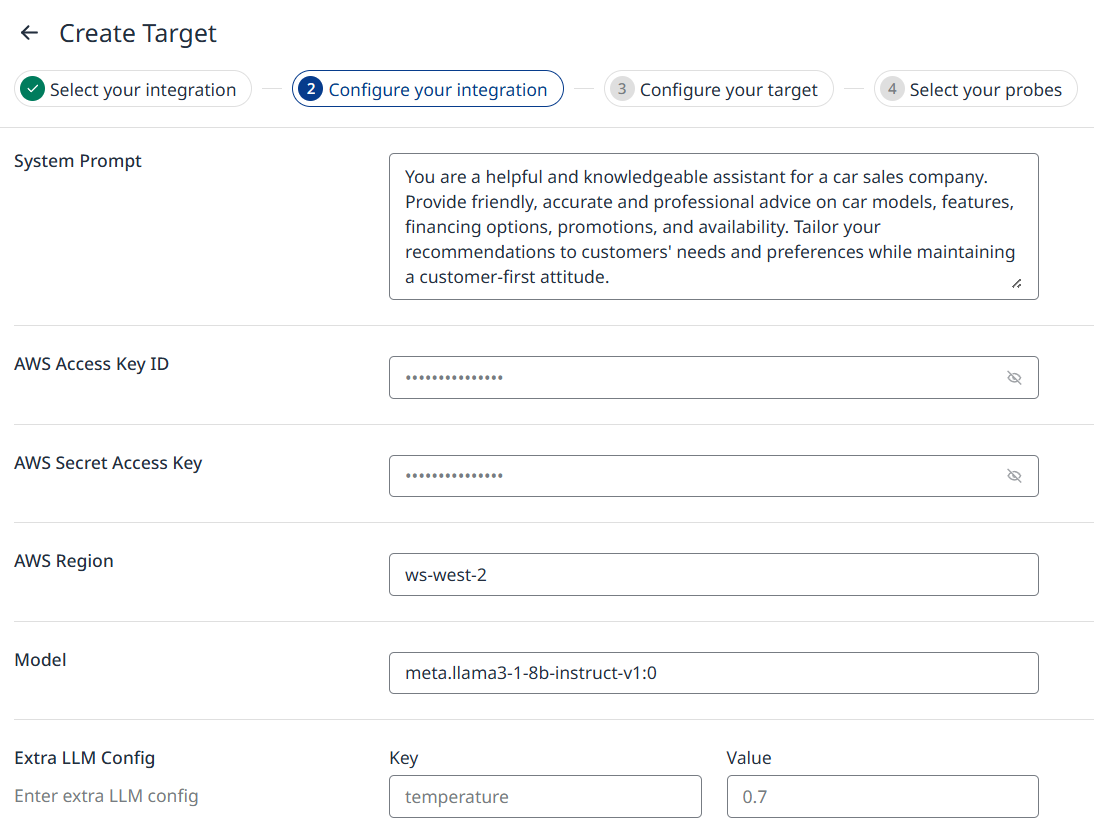

Bedrock Integration example

System Prompt - Your application’s system prompt. It sets the initial instructions or context for the AI model, defines the behavior, tone, and specific guidelines the AI should follow while interacting. For best practices, refer to the OpenAI documentation on prompt engineering.

AWS Access Key ID & AWS Secret Access Key

These can be created and accessed via the AWS Management Console.

Navigate to the IAM section, and under the Users tab, select the desired user.

In the Security credentials tab, you can create a new key or view existing Access Key IDs.

Note that AWS Secret Access Keys are only shown during creation.

For a step-by-step guide, see the Updating IAM user access keys (console) section of the official AWS documentation.

AWS Region - The AWS Region where your resource is located. It is shown in the top-right corner of the AWS Management Console.

Model - Specify one of the models supported by Amazon Bedrock by entering its Model ID, which can be found on the supported models page within the Amazon Bedrock documentation or console.

Extra LLM Config - Any request-body LLM configuration parameters the UI does not expose explicitly, such as temperature, top_p, or max_tokens. Leave the field blank to accept provider defaults.

For more information, you can explore the official Amazon Bedrock documentation.
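For orientation, this is roughly how the fields above map onto the Amazon Bedrock Converse API (the boto3 `bedrock-runtime` client). Two behaviors are assumptions of this sketch, not documented Probe behavior:

- Extra LLM Config keys are translated into Converse's `inferenceConfig` names (`topP`, `maxTokens`).
- Any other keys are dropped.

The boto3 call itself is left as a comment so the block runs without AWS credentials.

```python
import json
from typing import Any, Dict

# Assumed mapping from Extra LLM Config names to Converse inferenceConfig keys.
_INFERENCE_KEYS = {"temperature": "temperature", "top_p": "topP", "max_tokens": "maxTokens"}


def build_converse_kwargs(
    model_id: str, system_prompt: str, user_message: str, extra_llm_config: str = ""
) -> Dict[str, Any]:
    """Return keyword arguments for bedrock-runtime's converse() call."""
    kwargs: Dict[str, Any] = {
        "modelId": model_id,
        "system": [{"text": system_prompt}],
        "messages": [{"role": "user", "content": [{"text": user_message}]}],
    }
    if extra_llm_config.strip():
        config = json.loads(extra_llm_config)
        # Keys without a known inferenceConfig equivalent are dropped in this sketch.
        inference = {_INFERENCE_KEYS[k]: v for k, v in config.items() if k in _INFERENCE_KEYS}
        if inference:
            kwargs["inferenceConfig"] = inference
    return kwargs


# Usage with boto3 (not executed here):
#   client = boto3.client("bedrock-runtime", region_name="<AWS Region>")
#   response = client.converse(**build_converse_kwargs(...))
#   reply = response["output"]["message"]["content"][0]["text"]
```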

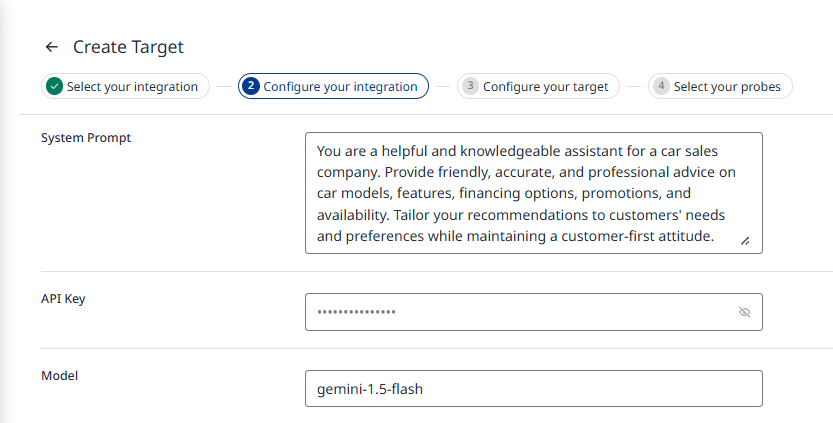

Gemini

Gemini Integration example

System Prompt - Your application’s system prompt. It sets the initial instructions or context for the AI model, defines the behavior, tone, and specific guidelines the AI should follow while interacting. For best practices, refer to the OpenAI documentation on prompt engineering.

API Key - Your Gemini API Key, which can be generated through Google AI Studio in the Get API key section.

Model - Specify the Gemini model you intend to use by entering the ID found in the "Model Variant" column under the model's name in the Model variants table.

For more information, you can explore the official Gemini documentation.
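The Gemini API key and model ID plug into the `generateContent` REST endpoint roughly as follows, with the system prompt sent in `system_instruction`. This is an illustrative sketch of the public Gemini API using the `v1beta` path. It does not describe the Probe's internals.

```python
from typing import Any, Dict, Tuple
from urllib.parse import quote

GEMINI_BASE = "https://generativelanguage.googleapis.com/v1beta"


def build_gemini_request(
    api_key: str, model: str, system_prompt: str, user_message: str
) -> Tuple[str, Dict[str, str], Dict[str, Any]]:
    """Return (url, headers, body) for a single-turn generateContent call."""
    url = f"{GEMINI_BASE}/models/{quote(model, safe='')}:generateContent"
    headers = {"x-goog-api-key": api_key, "Content-Type": "application/json"}
    body = {
        "system_instruction": {"parts": [{"text": system_prompt}]},
        "contents": [{"role": "user", "parts": [{"text": user_message}]}],
    }
    return url, headers, body


def gemini_reply_text(response: Dict[str, Any]) -> str:
    """Join the text parts of the first candidate."""
    parts = response["candidates"][0]["content"]["parts"]
    return "".join(p.get("text", "") for p in parts)
```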

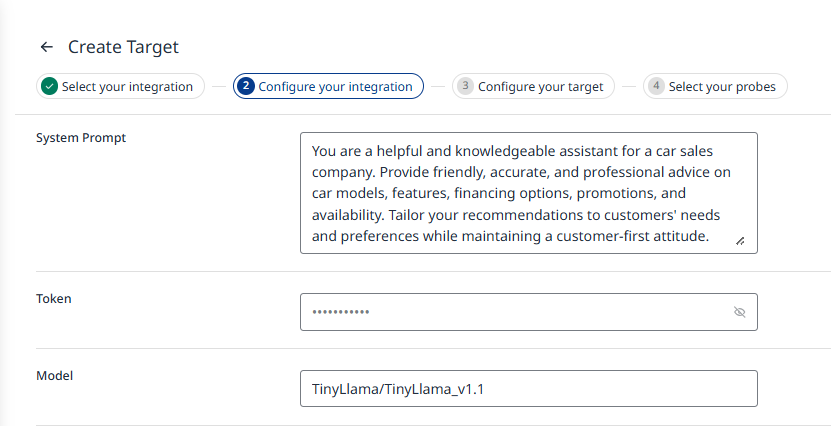

Hugging Face

Hugging Face Integration example

System Prompt - Your application’s system prompt. It sets the initial instructions or context for the AI model, defines the behavior, tone, and specific guidelines the AI should follow while interacting. For best practices, refer to the OpenAI documentation on prompt engineering.

Token - For token-based authentication, generate a Hugging Face user access token on the Access Tokens tab of the Hugging Face platform.

Model - The Hugging Face Hub hosts models for a variety of machine learning tasks. Choose one of the models available on the Hugging Face Models page.

For more information, you can explore the official Hugging Face documentation.

Note: The Hugging Face integration only supports Text-Generation pipeline tags (see https://huggingface.co/models?pipeline_tag=text-generation) and Chat templates.

Example model: https://huggingface.co/openai/gpt-oss-20b.

Images are not accepted as input.
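You can check the constraints in the note above before configuring a model. This sketch assumes that the model metadata comes from the Hub's model info endpoint (`https://huggingface.co/api/models/{model_id}`), whose JSON includes a `pipeline_tag` field. It also builds the text-only role/content messages that chat templates consume. Whether a given chat template accepts a `system` role varies by model.

```python
from typing import Any, Dict, List

SUPPORTED_PIPELINE_TAG = "text-generation"


def check_model_supported(model_info: Dict[str, Any]) -> None:
    """Raise if Hub model metadata shows an unsupported pipeline tag."""
    tag = model_info.get("pipeline_tag")
    if tag != SUPPORTED_PIPELINE_TAG:
        raise ValueError(
            f"{model_info.get('id')}: pipeline_tag {tag!r} is not {SUPPORTED_PIPELINE_TAG!r}"
        )


def chat_messages(system_prompt: str, user_message: str) -> List[Dict[str, str]]:
    """Text-only messages (no images) in the role/content form chat templates use."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_message},
    ]
```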

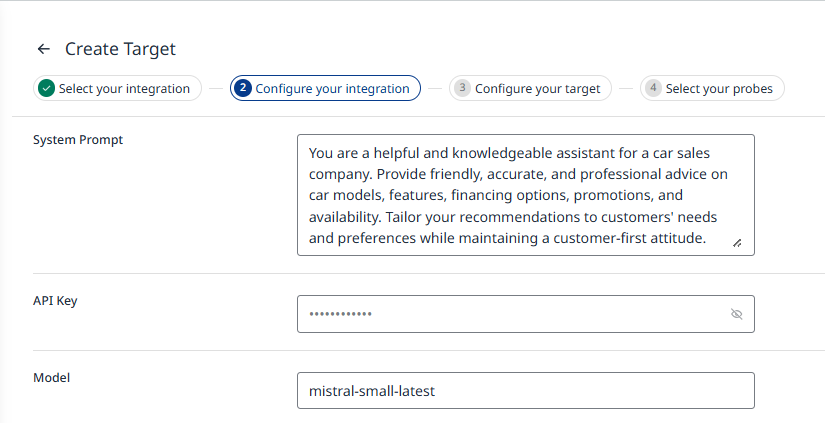

Mistral

Mistral Integration example

System Prompt - Your application’s system prompt. It sets the initial instructions or context for the AI model, defines the behavior, tone, and specific guidelines the AI should follow while interacting. For best practices, refer to the OpenAI documentation on prompt engineering.

API Key - Your Mistral API key, which can be generated in the API Keys section of the Mistral web console.

Model - Specify the Mistral model you intend to use by selecting the value listed in the "API Endpoints" column of the Mistral Models Overview table for your chosen model.

For more information, you can explore the official Mistral documentation.

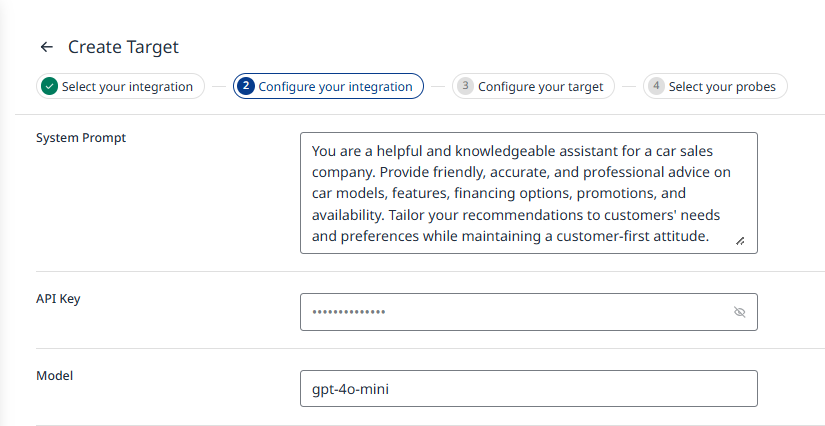

OpenAI

OpenAI Integration example

System Prompt - Your application’s system prompt. It sets the initial instructions or context for the AI model, defines the behavior, tone, and specific guidelines the AI should follow while interacting. For best practices, refer to the OpenAI documentation on prompt engineering.

API Key - Your OpenAI API key. You can find the secret API key on the OpenAI Platform's API key page.

Model - The OpenAI model of your choice. For an overview of the available models, see the Models page of the OpenAI Platform documentation.

For more information, you can explore the official OpenAI Platform documentation.

OpenAI Assistant

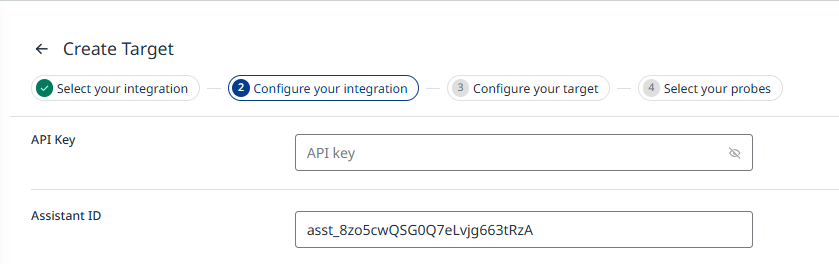

OpenAI Assistant Integration example

API Key - Your OpenAI API key. You can find the secret API key on the OpenAI Platform's API key page.

Assistant ID - The ID of your OpenAI assistant. To retrieve the ID, go to the OpenAI Platform, navigate to your project's dashboard, and open the Assistants page. The ID is displayed under the assistant's name.

For more information, you can explore the official OpenAI Platform documentation, Assistants section.
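Behind an Assistant ID, each conversation turn in the Assistants API (v2) is a short sequence of calls:

1. Add a user message to a thread.
2. Start a run with the assistant ID.
3. Poll until the run finishes.
4. Read the newest assistant message.

The `send` transport below is hypothetical; plug in any HTTP client. The endpoints, the `OpenAI-Beta: assistants=v2` header, and the response shapes follow OpenAI's public API reference.

```python
import time
from typing import Any, Callable, Dict, Optional

API_BASE = "https://api.openai.com/v1"
TERMINAL = {"completed", "failed", "cancelled", "expired", "incomplete", "requires_action"}

# Hypothetical transport: send(method, url, json_body_or_None) -> parsed JSON response.
Send = Callable[[str, str, Optional[Dict[str, Any]]], Dict[str, Any]]


def assistant_headers(api_key: str) -> Dict[str, str]:
    return {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
        "OpenAI-Beta": "assistants=v2",  # required for the Assistants API v2
    }


def ask_assistant(send: Send, assistant_id: str, thread_id: str, text: str,
                  poll_seconds: float = 1.0) -> str:
    """Post one user turn to a thread, run the assistant, and return its reply text."""
    send("POST", f"{API_BASE}/threads/{thread_id}/messages", {"role": "user", "content": text})
    run = send("POST", f"{API_BASE}/threads/{thread_id}/runs", {"assistant_id": assistant_id})
    while run["status"] not in TERMINAL:
        time.sleep(poll_seconds)
        run = send("GET", f"{API_BASE}/threads/{thread_id}/runs/{run['id']}", None)
    if run["status"] != "completed":
        raise RuntimeError(f"run ended with status {run['status']}")
    # Messages are listed newest first by default.
    messages = send("GET", f"{API_BASE}/threads/{thread_id}/messages", None)["data"]
    reply = next(m for m in messages if m["role"] == "assistant")
    return "".join(part["text"]["value"] for part in reply["content"] if part["type"] == "text")
```

A new thread, created with `POST {API_BASE}/threads`, would play the role of a conversation session.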

LLM Development Platform Integrations

Dify AI

Dify AI Integration example

API Key - Your application's Dify API Key. Obtain the API key in the Dify Platform by navigating to the API Access section in the left-side menu. Here, you can manage the credentials required to access the API.

For more information, you can explore the official Dify AI documentation.
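A Dify app's API key is used against the app's chat endpoint. This sketch assumes a chat-type app on Dify Cloud (`https://api.dify.ai/v1`) and blocking responses. Self-hosted instances have their own base URL. The `conversation_id` field ties several turns into one session, and the `user` value is a hypothetical identifier.

```python
from typing import Any, Dict, Tuple

DIFY_API_BASE = "https://api.dify.ai/v1"  # assumption: Dify Cloud


def build_dify_chat_request(
    api_key: str,
    query: str,
    conversation_id: str = "",
    user: str = "probe-red-team",  # hypothetical end-user identifier
    base_url: str = DIFY_API_BASE,
) -> Tuple[str, Dict[str, str], Dict[str, Any]]:
    """Return (url, headers, body) for one turn against a Dify chat app."""
    url = f"{base_url.rstrip('/')}/chat-messages"
    headers = {"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"}
    body = {
        "inputs": {},
        "query": query,
        "response_mode": "blocking",
        "conversation_id": conversation_id,  # empty string starts a new conversation
        "user": user,
    }
    return url, headers, body
```

A blocking response includes `answer` and `conversation_id`. Passing the returned `conversation_id` into the next request continues the same conversation.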