System Prompt Risk

Note:

This feature is only available with Mend AI Core or Mend AI Premium.

Contact your Customer Success Manager at Mend.io for more details.This feature is currently an open beta.

Overview

The System Prompt Risk table displays a row for each system prompt detected in the project or application by Mend AI. A system prompt risk is essentially a finding you can manage like any other finding in the platform.

The discovery of system prompts is essential, since system prompts implemented with bad practices expose the AI system to OWASP top 10 for LLM threats like insecure outputs, prompt injection, etc.

By proactively detecting and flagging these interfaces, Mend AI enables you to:

Instantly understand if a component is conversational in nature.

Get a clear remediation path.

Understand which applications or projects contain higher risks, improving governance and visibility.

Prerequisites

A relevant Mend AI entitlement for your organization.

Your organization’s consent to using AI features (via an addendum in your Mend.io contract).

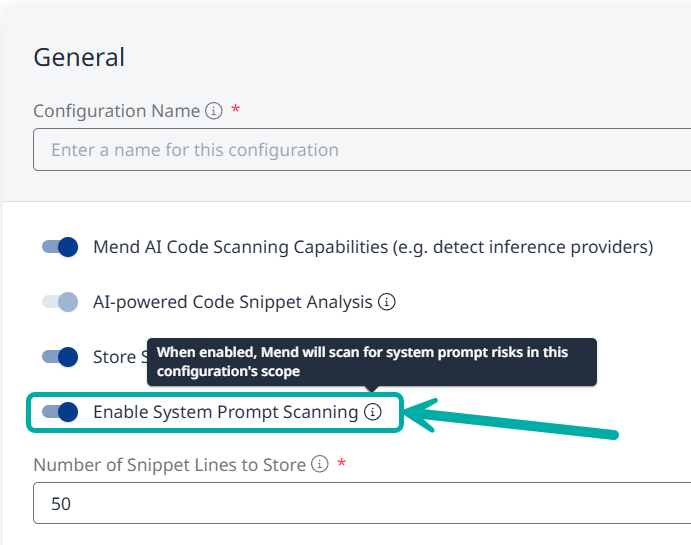

Enable System Prompt Detection

System Prompt Scanning is disabled by default.

As an organization administrator, you can enable it by creating a new AI configuration (or modifying an existing one) via the AI Configuration page and assigning it to a desired scope.

In the General section, toggle Enable System Prompt Scanning on.

When enabled, Mend AI will scan for system prompt risks within the scope of this configuration.

Note: If the toggle is not visible to you, please review the Prerequisites section on this page.

The System Prompt Risk Table

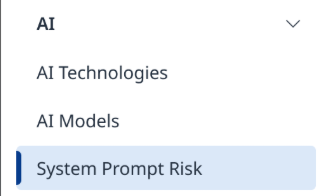

When in the context of an application/project, click the System Prompt Risk button on the left-pane menu:

This will take you to the System Prompt Risk table, listing information about the origin of the system prompt risk, its severity, the aggregated findings associated with it and its risk factors.

Risk Factors

Some system prompts might be flagged using a Conversational Interface chip (![]() ) in the Risk Factors column, indicating the prompt is classified as a conversational AI interface risk. Such interfaces tend to have higher risk exposure due to their fuzzy and open-ended nature.

) in the Risk Factors column, indicating the prompt is classified as a conversational AI interface risk. Such interfaces tend to have higher risk exposure due to their fuzzy and open-ended nature.

The risk factor indicator will help you prioritize such system prompts for mitigation.

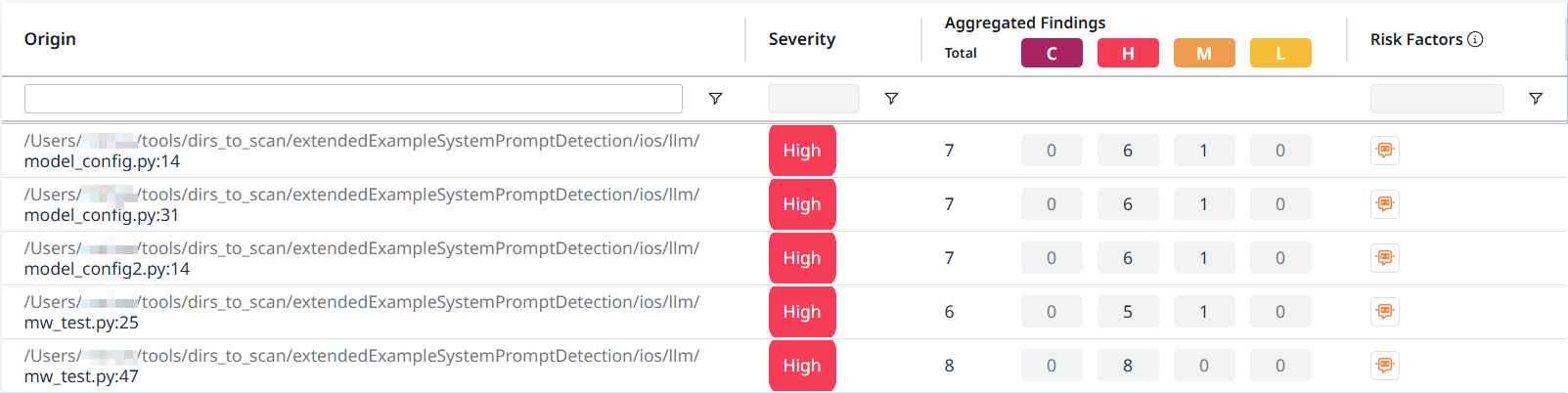

The System Prompt Risk Side-Panel

Clicking anywhere on a row will take you to the side-panel of the system prompt risk.

This side-panel is structured in a similar fashion to other side-panels across the Mend AI-Native AppSec Platform and includes the following information:

The Overview tab

The Overview tab is the default tab. It contains a Description section as well as a Security Overview section, listing information that is also available in the System Prompt Risk Table itself, namely Severity, (Aggregated) AI Findings and Risk Factors.

The System Prompt itself will also be available for you at the bottom of this page.

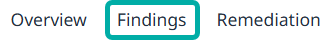

The Findings tab

Switching to the Findings tab will reveal information about the findings associated with the system prompt risk:

AI Weakness - A description of the finding.

Finding Id - The ID of the finding in the database.

Severity - The severity level of the finding.

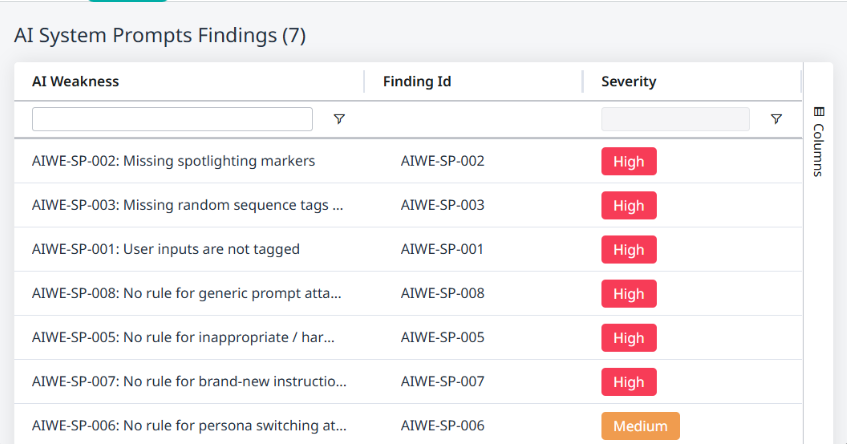

The Remediation tab

The Remediation tab is where Mend AI’s System Prompt Hardening comes into play, allowing you to view the differences between the hardened system prompt and the original, as well as copy the hardened system prompt to the clipboard, to be used in your application.

Toggle Show Changes on to review the changes in the hardened system prompt compared to the original.

Click the Copy button to copy the hardened system prompt to the clipboard

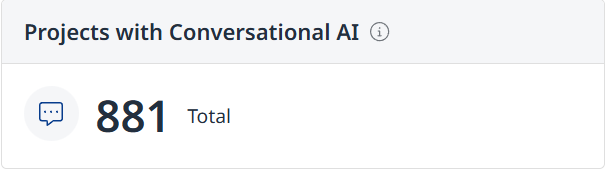

System Prompt Risk in the AI Security Dashboard

The AI Security Dashboard provides a high-level summary of the System Prompt Risk in your organization.

Projects with Conversational AI - Displays the total number of projects in the organization using conversational AI in system prompts.

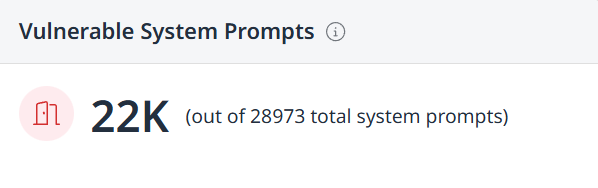

Vulnerable System Prompts - Displays the number of vulnerable system prompts out of the total number of system prompts.

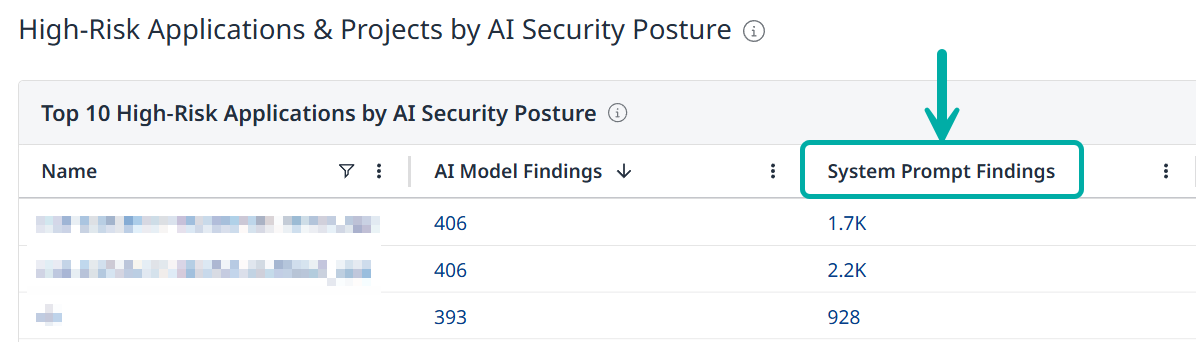

The number of system prompt findings per application or project is displayed in the “High-Risk Applications & Projects by AI Security Posture” tables.

System Prompt Risk APIs

System Prompt data are also available to you via API. Refer to the the API documentation for more information.

Project-level endpoints:

https://api-docs.mend.io/platform/3.0/findings-project/getprojectimplementationfindings

https://api-docs.mend.io/platform/3.0/findings-project/getimplementationsnippet

Application-level endpoints:

Global endpoints:

AIWE Coverage

AI-WE | Category | OWASP LLM Category | Title | Description |

AIWE-SP-001 | System Prompt Weaknesses | LLM01 Prompt Injection | User inputs are not tagged | Untrusted user text is concatenated with system instructions without clear delimiters, allowing prompt‑injection payloads to masquerade as control instructions. (Context: subcategory: Input Validation, threat type: Prompt Injection) |

AIWE-SP-002 | System Prompt Weaknesses | LLM01 Prompt Injection; LLM07 System Prompt Leakage | Missing spotlighting markers | The system does not spotlight user‑supplied text with a unique sentinel, enabling attackers to smuggle instructions into free‑form content. (Context: subcategory: Input Validation, threat type: Prompt Injection) |

AIWE-SP-003 | System Prompt Weaknesses | LLM01 Prompt Injection; LLM07 System Prompt Leakage | Missing random sequence tags around the trusted system block | Trusted system block lacks a per‑session random "sandwich" wrapper, making it easier for adversaries to fingerprint and leak the prompt. (Context: subcategory: System Isolation, threat type: Prompt Injection) |

AIWE-SP-005 | System Prompt Weaknesses | LLM01 Prompt Injection | No rule for inappropriate/harmful user content | No explicit policy guard‑rail against hateful, violent or self‑harm content. (Context: subcategory: Content Filtering, threat type: Policy Bypass) |

AIWE-SP-006 | System Prompt Weaknesses | LLM01 Prompt Injection; LLM07 System Prompt Leakage | No rule for persona switching attempts | A model can be coerced to change persona or role, undermining access controls. (Context: subcategory: Identity Protection, threat type: Persona Switch) |

AIWE-SP-007 | System Prompt Weaknesses | LLM01 Prompt Injection | No rule for brand-new instructions received from the user | Conflicting instructions are processed without hierarchy, causing non‑deterministic behavior. (Context: subcategory: Instruction Override, threat type: Instruction Injection) |

AIWE-SP-008 | System Prompt Weaknesses | LLM01 Prompt Injection; LLM05 Improper Output Handling; LLM07 System Prompt Leakage | No rule for generic prompt attacks | Critical system commands are in clear text and can be spoofed. (Context: subcategory: Attack Prevention, threat type: Prompt Attack) |

AIWE-SP-018 | System Prompt Weaknesses | LLM02 Sensitive Information Disclosure | Secrets embedded inside the system prompt | Secrets (API keys, connection strings) are hard‑coded in the system prompt. (Context: subcategory: Information Disclosure, threat type: Sensitive Data Exposure) |

Limitations

Limited support for the detection of single-line system prompts.

Partial support for prompts constructed through string concatenation.

For example, prompts assembled across multiple variables or split strings.Detection currently supports English-language prompts only.