The Shadow AI Report

Overview

With Mend AI, you can generate an awareness report, the Shadow AI report, that provides a detailed map of AI usage across your organization.

Getting It Done

Prerequisites

Your Mend organization has an LLM entitlement

Your Mend organization has access to the Mend Platform to view the results

Scan your dependencies with the Mend CLI SCA

To run a scan and generate results with the AI BoM report capability, follow the scan steps in the "Scan your open source components (SCA) with the Mend CLI" documentation. When the scan completes, open the Mend Platform to view and analyze the AI technologies identified in the BoM report.

View the results in the Mend Platform

You can access the Shadow AI report on two levels:

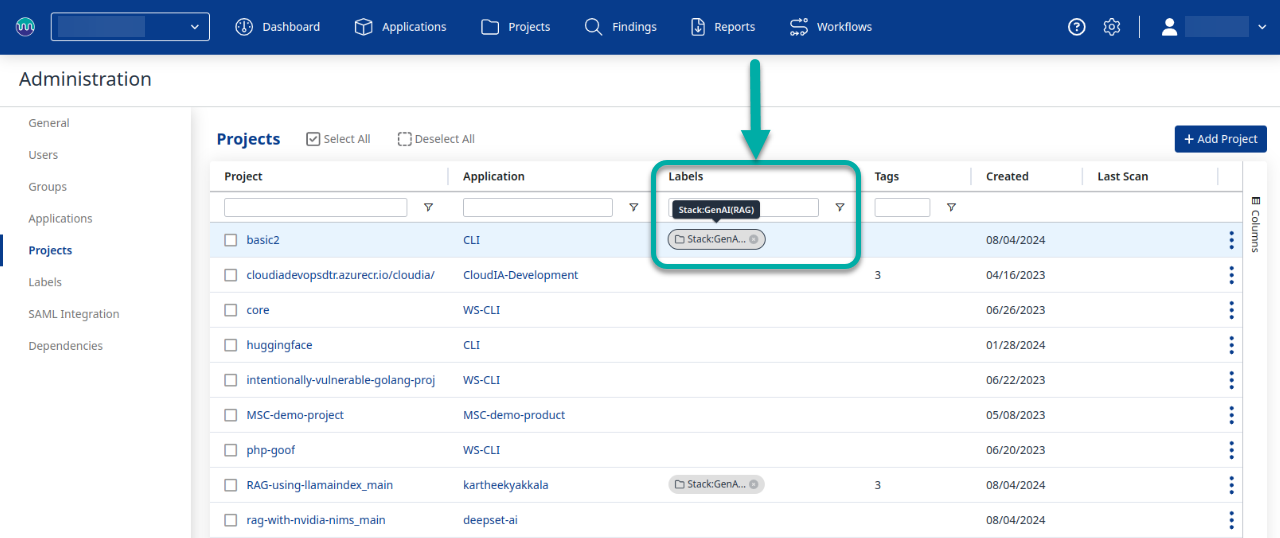

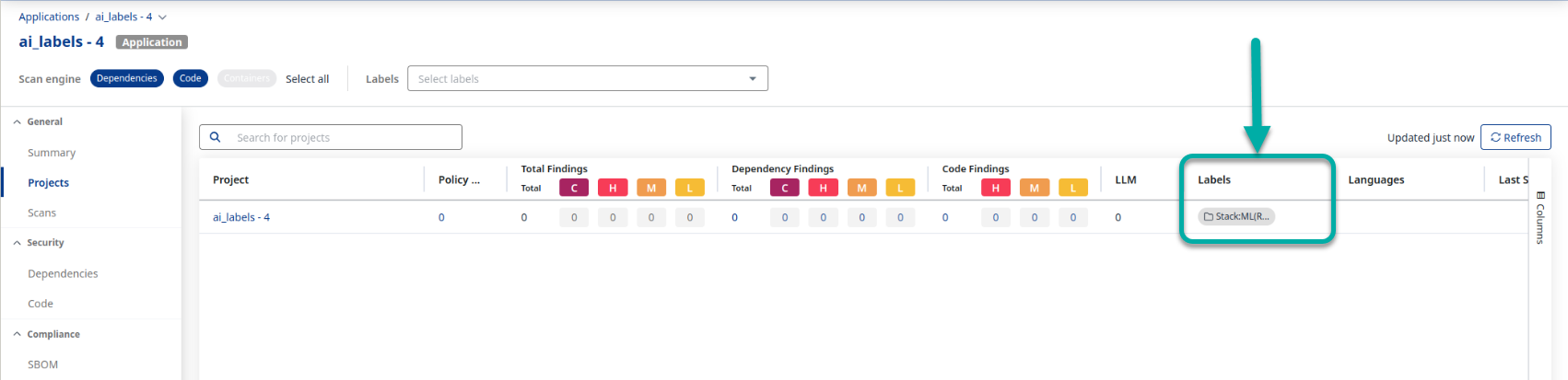

Project: a project identified as containing AI technologies carries dedicated Shadow AI labels.

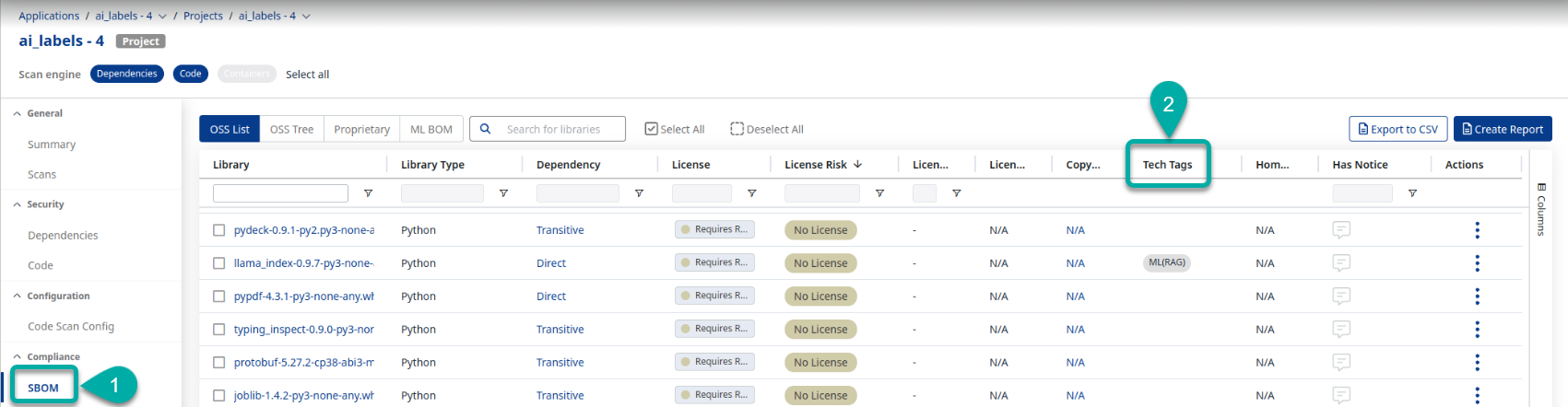

Project libraries: in a project's SBOM, each library that belongs to a group of libraries making up an AI technology carries an added Tech Tag.

To view Shadow AI project labels, navigate to an application’s project list in the Mend Platform.

To view the libraries of a Shadow AI-labeled project, select that project and open its SBOM view. In the SBOM view, each library identified as part of a Shadow AI label shows a matching indication in the Tech Tags column, similar to the project label.

Note that a project may be labeled with multiple Shadow AI labels, and a library may have multiple Tech Tags.
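The relationship between project labels and library Tech Tags can be pictured as a small data model. The following is a hypothetical sketch, not Mend's implementation or API: the AI_TECHNOLOGIES mapping, the Library class, and the tag_libraries and project_labels functions are illustrative assumptions. It also assumes that a project's Shadow AI labels are simply the union of its libraries' Tech Tags; the platform's actual detection logic may be stricter.

```python
from dataclasses import dataclass, field

# HYPOTHETICAL mapping of AI technology -> library names that make it up.
# Illustrative only; this is not Mend's detection data.
AI_TECHNOLOGIES: dict[str, set[str]] = {
    "OpenAI": {"openai", "tiktoken"},
    "LangChain": {"langchain", "langchain-core", "tiktoken"},
}


@dataclass
class Library:
    """A library row in a project's SBOM, with its (assumed) Tech Tags."""
    name: str
    tech_tags: set[str] = field(default_factory=set)


def tag_libraries(library_names: list[str],
                  technologies: dict[str, set[str]]) -> list[Library]:
    """Give each library one Tech Tag per AI technology group it belongs to.

    A library that appears in several groups gets several Tech Tags.
    """
    libraries = []
    for name in library_names:
        tags = {tech for tech, members in technologies.items() if name in members}
        libraries.append(Library(name, tags))
    return libraries


def project_labels(libraries: list[Library]) -> set[str]:
    """ASSUMPTION: a project's Shadow AI labels are the union of its libraries' Tech Tags."""
    labels: set[str] = set()
    for lib in libraries:
        labels |= lib.tech_tags
    return labels


if __name__ == "__main__":
    libs = tag_libraries(["openai", "tiktoken", "requests"], AI_TECHNOLOGIES)
    for lib in libs:
        print(lib.name, sorted(lib.tech_tags))
    print("Project labels:", sorted(project_labels(libs)))
```

In this sketch, "tiktoken" belongs to two hypothetical groups and so carries two Tech Tags, and the project ends up with two Shadow AI labels, mirroring the note that both projects and libraries can carry more than one.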