View your Results and Create Reports

Note:

Mend AI is available as part of the Mend AppSec Platform.

Some features require a Mend AI Core or Premium entitlement for your organization.

Contact your Customer Success Manager at Mend.io to learn about enabling Mend AI.

Overview

This document provides an overview of Mend.io's detection capabilities for Large Language Models (LLMs). It describes the feature's architecture, functionality, and integration.

Mend AI identifies open-source models hosted on Kaggle or Hugging Face and enables you to issue vulnerability reports or malicious component (MSC) reports based on our detection capabilities and community reports.

Mend AI scans repository data released on Hugging Face to detect potentially malicious or unwanted components in those repositories. It can also scan models in a file system to identify vulnerabilities, licensing issues, and malicious models. Key features of file-system scanning include:

Known Vulnerability Identification: Detects known vulnerabilities in models.

New Vulnerability Identification: Scans models for serialization vulnerabilities.

License Compliance: Ensures models comply with licensing requirements.

Malicious Model Detection: Identifies and mitigates risks from malicious models.
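To make the idea of a serialization vulnerability concrete, the following is a minimal sketch of one common check: disassembling a pickle-serialized model file and flagging opcodes that can import or call arbitrary code on load. It is not Mend AI's implementation; the function name and the opcode list are assumptions chosen for illustration, and only Python's standard `pickle` and `pickletools` modules are used.

```python
import io
import pickle
import pickletools

# Illustrative (assumed) set of opcodes that can import or invoke
# arbitrary callables while a pickle stream is being loaded.
SUSPICIOUS_OPCODES = {
    "GLOBAL", "STACK_GLOBAL", "REDUCE", "INST", "OBJ", "NEWOBJ", "NEWOBJ_EX",
}


def find_suspicious_opcodes(data: bytes) -> list[str]:
    """Return the names of suspicious opcodes in a pickle stream, in order.

    The stream is only disassembled, never unpickled, so no payload runs.
    """
    found = []
    for opcode, _arg, _pos in pickletools.genops(io.BytesIO(data)):
        if opcode.name in SUSPICIOUS_OPCODES:
            found.append(opcode.name)
    return found


if __name__ == "__main__":
    # A plain dictionary of numbers needs no imports or calls to rebuild.
    plain = pickle.dumps({"weights": [0.1, 0.2]})
    print(find_suspicious_opcodes(plain))
```

Legitimate model files can also contain some of these opcodes (for example, to rebuild tensor objects), so a real scanner would need an allowlist of known-safe imports rather than a simple opcode match.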

Getting it done

View your Model Detection Results in the Mend Platform UI

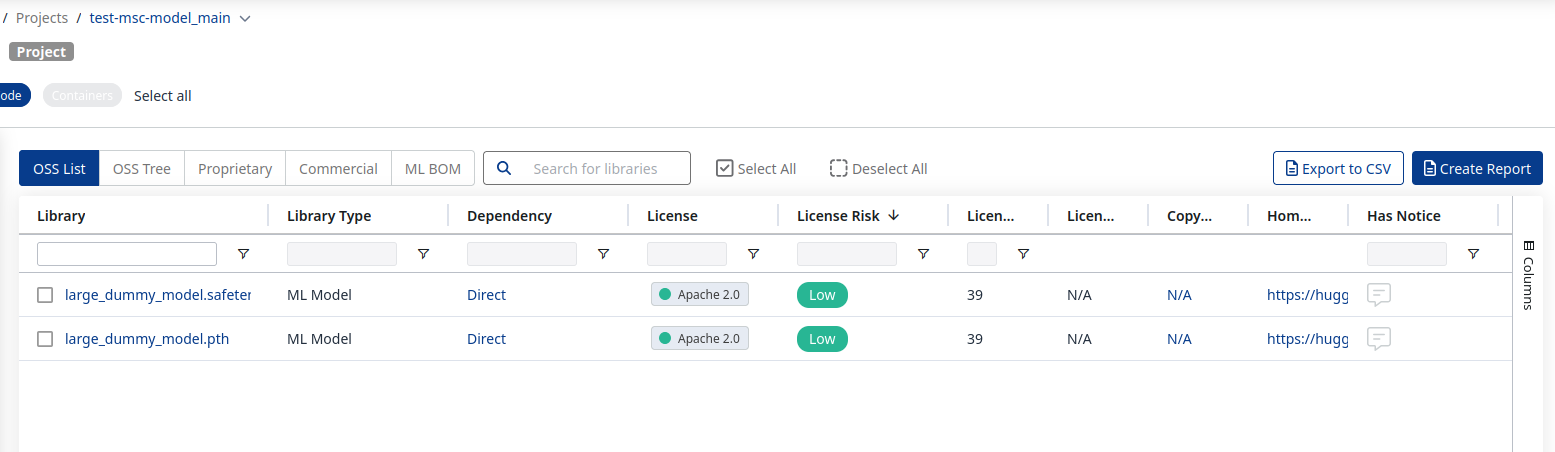

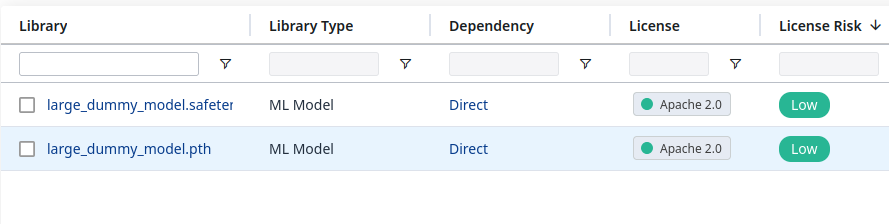

At present, Mend AI can detect locally stored models originating from Hugging Face and Kaggle and recognize them within users' software. After scanning, data about the detected models is available in the SBOM (Software Bill of Materials), and the scan results are displayed in the Mend Platform, as demonstrated below:

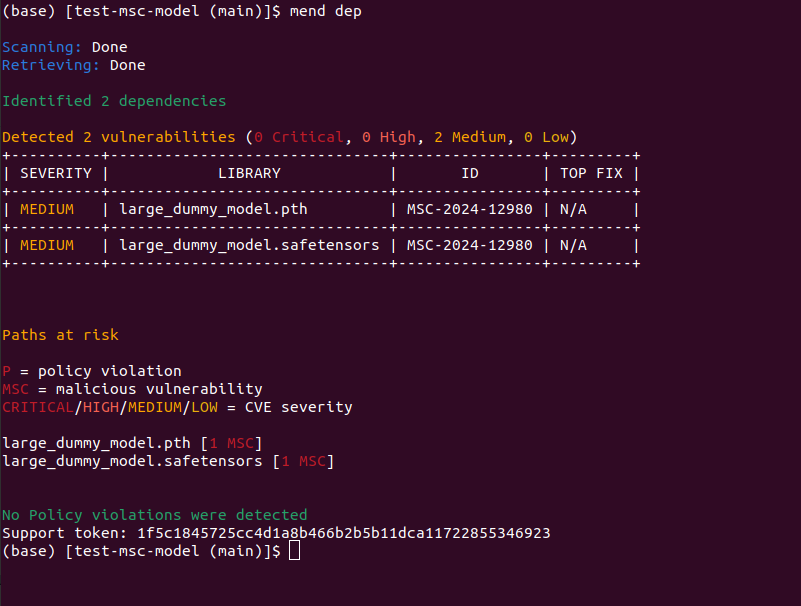

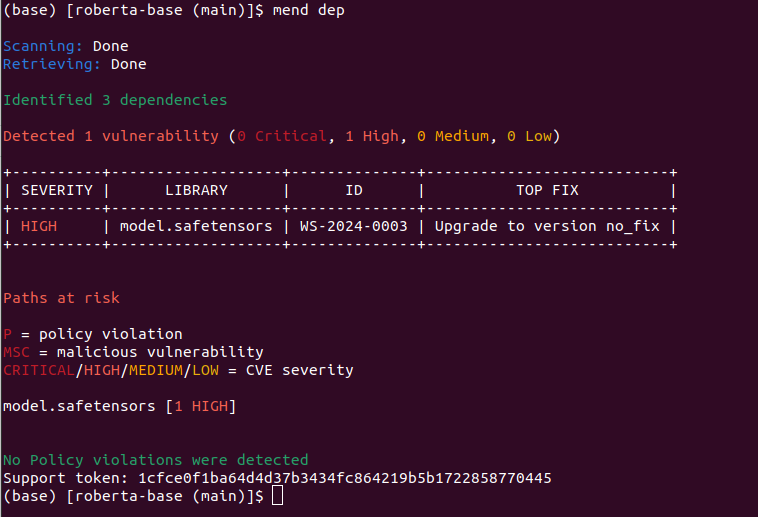
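If you export the SBOM and want to pull out the detected models programmatically, a sketch along the following lines may help. It assumes the SBOM is a CycloneDX JSON document in which models use the `machine-learning-model` component type (defined in CycloneDX 1.5); whether your export uses that format and type is an assumption to verify. The function name and the sample data are hypothetical.

```python
import json


def list_model_components(sbom: dict) -> list[tuple[str, str]]:
    """Return (name, version) pairs for components typed as ML models.

    Assumes a CycloneDX-style layout: a top-level "components" array whose
    entries carry "type", "name", and optionally "version".
    """
    models = []
    for component in sbom.get("components", []):
        if component.get("type") == "machine-learning-model":
            models.append((component.get("name", ""), component.get("version", "")))
    return models


if __name__ == "__main__":
    # Hypothetical sample SBOM, not real Mend output.
    sample = json.loads("""
    {
      "bomFormat": "CycloneDX",
      "specVersion": "1.5",
      "components": [
        {"type": "library", "name": "requests", "version": "2.31.0"},
        {"type": "machine-learning-model", "name": "FacebookAI/roberta-base"}
      ]
    }
    """)
    for name, version in list_model_components(sample):
        print(name, version or "(no version recorded)")
```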

View your Model Detection Results in the Mend CLI

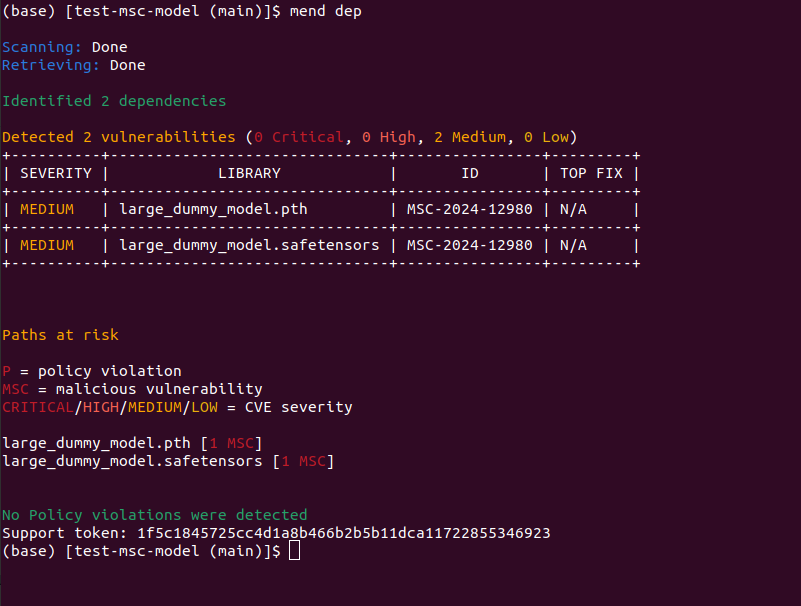

The scan results are immediately visible in the CLI itself:

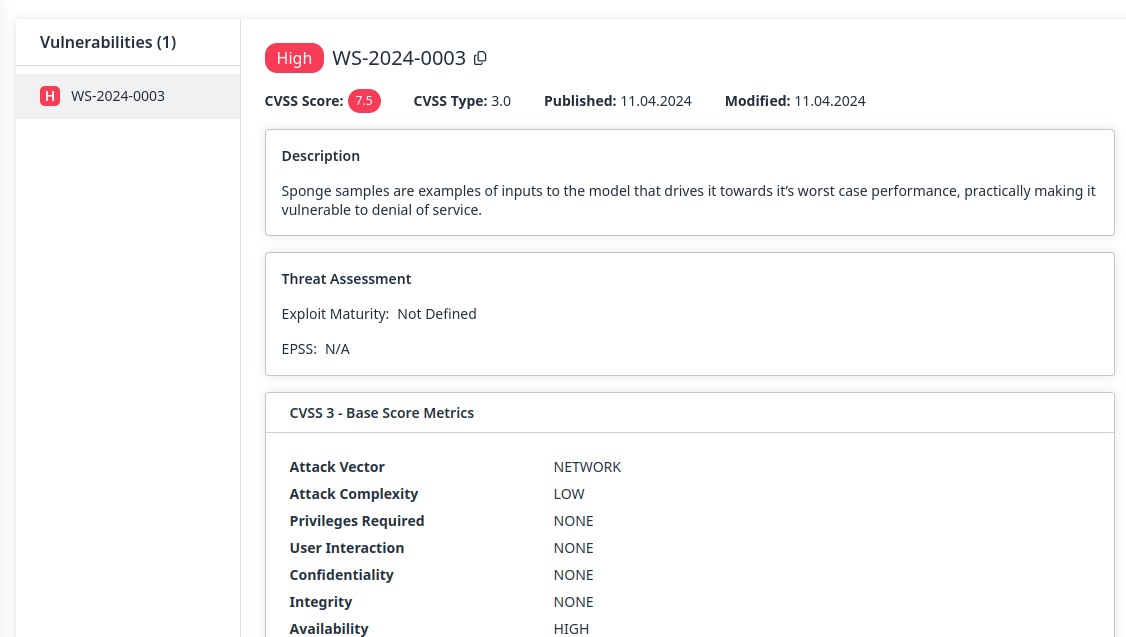

Vulnerability Reporting

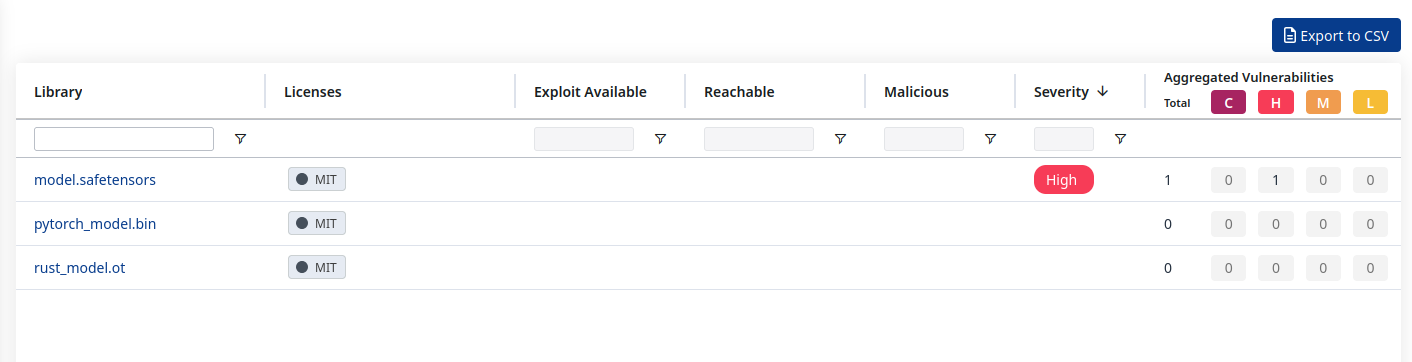

Using Mend AI, you can issue vulnerability reports for recognized open-source models from Hugging Face and Kaggle. Models scanned with the Mend CLI are added to the inventory, and their vulnerabilities are visible in the UI.

In the example below, you can see an OSS model with a known vulnerability being reported (https://huggingface.co/FacebookAI/roberta-base/tree/main):

Note: These types of vulnerabilities do not differ from vulnerabilities detected in other OSS components.

Model-related vulnerabilities are also displayed in the CLI scan result:

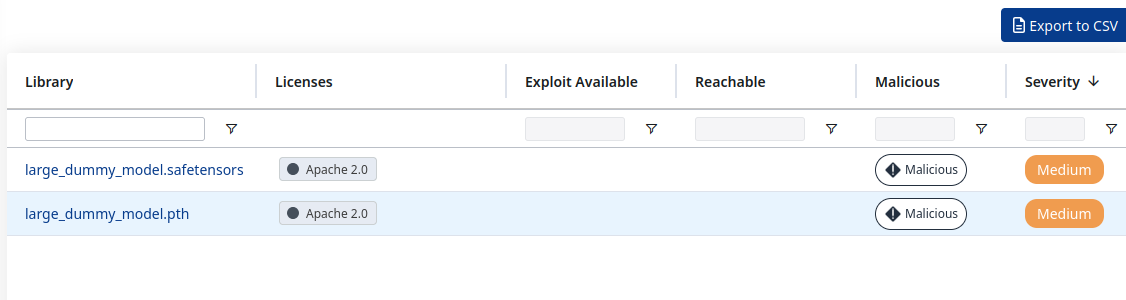

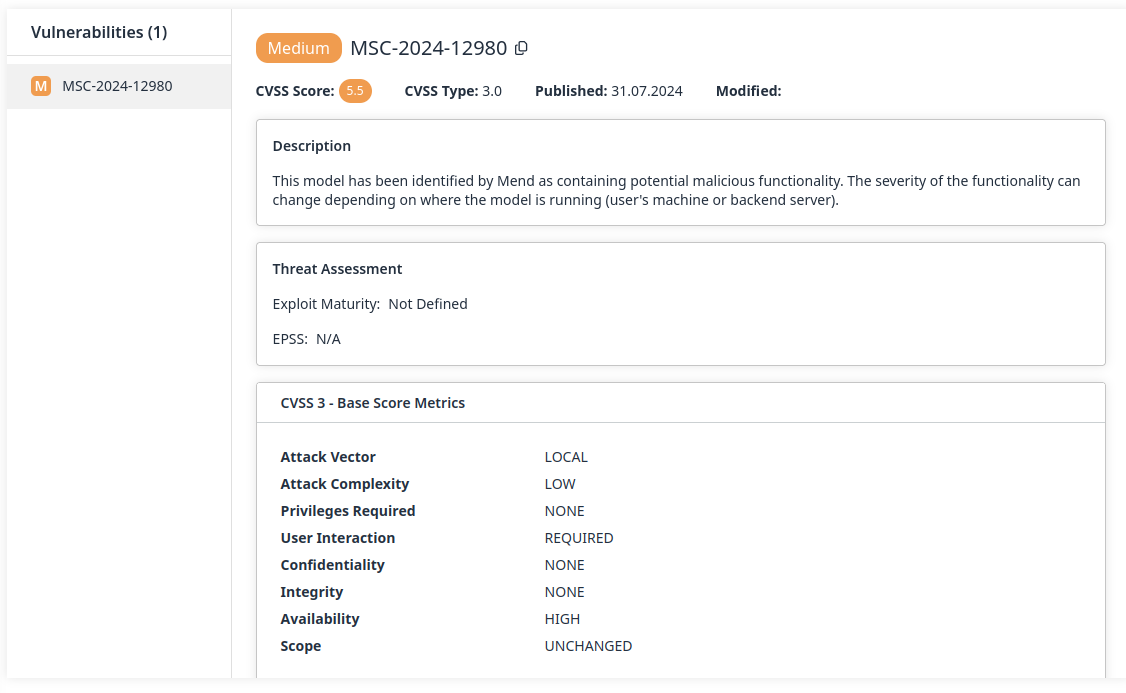

Malicious Reporting

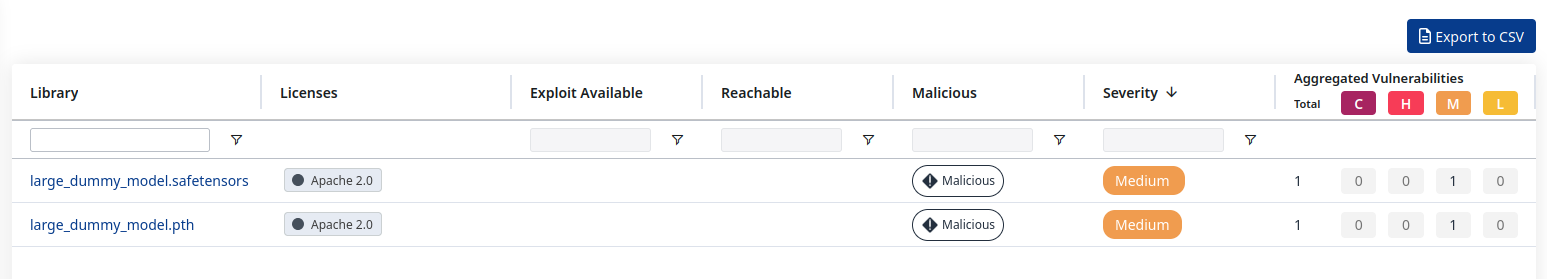

As with vulnerabilities, Mend AI detects and reports malicious components in model repositories, as demonstrated below:

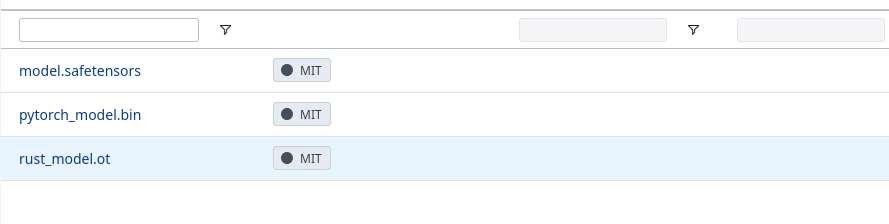

License Reporting

In addition to all the security-related features, Mend AI also provides license details for recognized models, as demonstrated below:

To date, 71 licenses used within the Hugging Face space have been detected. For the list of licenses used on Hugging Face, see: https://huggingface.co/docs/hub/repositories-licenses
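For context on where a model's license typically comes from: Hugging Face model cards (the repository's `README.md`) usually declare it in a `license` field in the YAML front matter at the top of the file. The sketch below reads that field from a locally stored model card using only the standard library. It illustrates the metadata format, not how Mend AI resolves licenses; the function name and the sample card are hypothetical, and the simple line-based parsing handles only a single-value `license` entry.

```python
from typing import Optional


def read_license_from_model_card(text: str) -> Optional[str]:
    """Return the top-level `license` value from a model card's front matter.

    Returns None if the card has no front matter or no license field.
    """
    lines = text.splitlines()
    # Front matter must start on the first line with a '---' delimiter.
    if not lines or lines[0].strip() != "---":
        return None
    for line in lines[1:]:
        if line.strip() == "---":  # closing delimiter: stop searching
            break
        key, sep, value = line.partition(":")
        # Match only an unindented (top-level) 'license' key.
        if sep and key == "license":
            return value.strip().strip("'\"") or None
    return None


if __name__ == "__main__":
    # Hypothetical model card content.
    card = "---\nlicense: mit\ntags:\n- nlp\n---\n# Example model\n"
    print(read_license_from_model_card(card))
```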