Hugging Face Unsafe Models

Overview

Companies that rely on Hugging Face models depend on scanners such as Picklescan and Protect AI for vulnerability information. However, many reported vulnerabilities may be unverified or false positives, which makes it hard to decide whether a model is safe to use. By independently validating these vulnerabilities and exposing their statuses in the Mend AppSec Platform, Mend AI provides a more accurate, security-driven assessment of model risk.

Mend AI’s Analysis of Hugging Face Models

Mend AI verifies Hugging Face's tagging of unsafe models, providing a clear indication of whether a model that Hugging Face deems unsafe is actually unsafe or is a false positive.

Model Safety Statuses

There are four Hugging Face model safety statuses in the Mend AppSec Platform UI. Two of them, “False-Positive” and “Confirmed Unsafe”, are assigned by the Mend AI Research team after it analyzes the model in question. The other two, “No Findings” and “Unconfirmed Unsafe”, are provisional and can later change to one of those two statuses.

No Findings – No known vulnerabilities detected.

False-Positive – Safe, i.e., reported by Hugging Face as unsafe but refuted by the Mend AI Research team.

Confirmed Unsafe – Unsafe, i.e., reproduced and verified by the Mend AI Research team.

Unconfirmed Unsafe – Suspected Unsafe, i.e., tagged by Hugging Face as unsafe, but not reviewed by the Mend AI Research team.
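Read together, the four definitions above amount to a simple decision rule. The following Python sketch encodes one reading of it. The `SafetyStatus` enum, the `derive_status` function, and its two inputs are hypothetical names for illustration, not part of any Mend API.

```python
from enum import Enum
from typing import Optional


class SafetyStatus(Enum):
    """The four Hugging Face model safety statuses shown in the UI."""
    NO_FINDINGS = "No Findings"
    FALSE_POSITIVE = "False-Positive"
    CONFIRMED_UNSAFE = "Confirmed Unsafe"
    UNCONFIRMED_UNSAFE = "Unconfirmed Unsafe"


def derive_status(hf_flagged_unsafe: bool, research_verdict: Optional[bool]) -> SafetyStatus:
    """Map hypothetical inputs to a status, following the definitions above.

    hf_flagged_unsafe: whether Hugging Face tagged the model as unsafe.
    research_verdict: None if the Mend AI Research team has not reviewed the model,
        True if the team reproduced the unsafe behavior, False if it refuted the tag.
    """
    if research_verdict is True:
        # Reproduced and verified by the research team.
        return SafetyStatus.CONFIRMED_UNSAFE
    if hf_flagged_unsafe:
        # Tagged by Hugging Face: refuted -> False-Positive, not yet reviewed -> Unconfirmed Unsafe.
        if research_verdict is False:
            return SafetyStatus.FALSE_POSITIVE
        return SafetyStatus.UNCONFIRMED_UNSAFE
    # No Hugging Face tag and no confirmed finding.
    return SafetyStatus.NO_FINDINGS
```

Under this reading, a model that Hugging Face has tagged starts as Unconfirmed Unsafe, and setting `research_verdict` moves it to one of the two research-assigned statuses.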

The Mend AI Model Selection Criteria

Models are selected for investigation based on three criteria:

The presence of a "Suspected Unsafe" tag.

The model's popularity, based on its download count.

The "Suspected Unsafe" tag referencing files other than training_args.bin (a file used only for fine-tuning and not loaded during the standard model loading process).
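The three criteria can be sketched as a filter followed by a ranking. In this Python sketch, the `HubModel` record, its field names, and the `top_n` cutoff are hypothetical. The criteria above do not state a download threshold, so popularity is modeled here as ranking by download count.

```python
from dataclasses import dataclass, field
from pathlib import PurePosixPath
from typing import List


@dataclass
class HubModel:
    """Hypothetical record for a Hugging Face model; field names are illustrative."""
    repo_id: str
    downloads: int
    unsafe_files: List[str] = field(default_factory=list)  # files referenced by the unsafe tag


# Used only for fine-tuning and not loaded during standard model loading,
# so a tag that references only this file does not qualify a model for review.
IGNORED_FILES = {"training_args.bin"}


def is_review_candidate(model: HubModel) -> bool:
    """True if the unsafe tag references at least one file other than training_args.bin."""
    return any(PurePosixPath(path).name not in IGNORED_FILES for path in model.unsafe_files)


def select_for_review(models: List[HubModel], top_n: int) -> List[HubModel]:
    """Keep tagged candidates, then rank them by popularity (download count)."""
    candidates = [m for m in models if is_review_candidate(m)]
    candidates.sort(key=lambda m: m.downloads, reverse=True)
    return candidates[:top_n]
```

An empty `unsafe_files` list means the model carries no unsafe tag, so `any()` returns `False` and the first criterion is covered by the same check as the third.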

The Mend AI Investigation Process

The technical investigation consists of the following steps:

An automated analysis using a proprietary Mend.io scanning tool.

A manual code review by Mend.io researchers to determine whether genuinely malicious code exists within the model and whether it poses an actual risk to users.
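Mend.io's scanning tool is proprietary, so the following is not its implementation. It is a minimal Python sketch of the general technique that open-source scanners such as Picklescan are built around: walk the pickle's opcodes with the standard-library `pickletools` module, without unpickling anything, and report the imports (`GLOBAL` and `STACK_GLOBAL` opcodes) that loading the file would trigger. The `SUSPICIOUS_GLOBALS` denylist is a hypothetical example chosen to echo the behaviors in the Examples of Verified Unsafe Models table, and treating zip members ending in `.pkl` as the pickles inside a PyTorch checkpoint is an assumption about the `torch.save` file layout.

```python
import io
import pickletools
import zipfile
from typing import Dict, List, Optional, Tuple

# Hypothetical denylist for illustration only; not Mend's (or any scanner's) rule set.
SUSPICIOUS_GLOBALS = {
    ("os", "system"), ("posix", "system"), ("nt", "system"),
    ("subprocess", "Popen"), ("subprocess", "run"),
    ("builtins", "exec"), ("builtins", "eval"),
    ("builtins", "getattr"), ("builtins", "__import__"),
    ("webbrowser", "open"), ("runpy", "_run_code"), ("pdb", "set_trace"),
}

# Opcodes whose argument is a text string.
STRING_OPS = {"STRING", "BINSTRING", "SHORT_BINSTRING",
              "UNICODE", "BINUNICODE", "SHORT_BINUNICODE", "BINUNICODE8"}


def imported_globals(data: bytes) -> List[Tuple[str, str]]:
    """List the (module, name) pairs a pickle would import, without unpickling it."""
    found: List[Tuple[str, str]] = []
    strings: List[str] = []              # string values pushed so far, in order
    memo: Dict[int, Optional[str]] = {}  # memo slot -> string stored there (None if not a string)
    last: Optional[str] = None           # string pushed by the previous opcode, if any
    for opcode, arg, _pos in pickletools.genops(data):
        op = opcode.name
        if op in STRING_OPS:
            last = arg
            strings.append(arg)
        elif op == "MEMOIZE":  # protocol 4+: store the top of the stack in the next slot
            memo[len(memo)] = last
        elif op in ("PUT", "BINPUT", "LONG_BINPUT"):
            memo[arg] = last
        elif op in ("GET", "BINGET", "LONG_BINGET"):
            last = memo.get(arg)  # a repeated module name is often fetched from the memo
            if last is not None:
                strings.append(last)
        elif op == "GLOBAL":  # protocols 0-3: the argument is "module name"
            module, name = arg.split(" ", 1)
            found.append((module, name))
            last = None
        elif op == "STACK_GLOBAL" and len(strings) >= 2:
            # Protocol 4+: module and name are the two strings pushed just before.
            found.append((strings[-2], strings[-1]))
            last = None
        else:
            last = None
    # Python 3 writes "builtins" as "__builtin__" in protocol 0-2 pickles (Python 2 compatibility).
    return [("builtins" if m == "__builtin__" else m, n) for m, n in found]


def scan_model_file(raw: bytes) -> List[Tuple[str, str]]:
    """Return denylisted imports found in a raw pickle or a zip-based checkpoint."""
    if zipfile.is_zipfile(io.BytesIO(raw)):
        with zipfile.ZipFile(io.BytesIO(raw)) as archive:
            pickles = [archive.read(n) for n in archive.namelist() if n.endswith(".pkl")]
    else:
        pickles = [raw]
    return [g for data in pickles for g in imported_globals(data) if g in SUSPICIOUS_GLOBALS]
```

A denylist hit only flags a file; deciding whether the import is actually harmful is what the manual review step is for.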

The investigation yields one of two statuses:

If vulnerabilities are confirmed in the model, its status changes to Confirmed Unsafe.

If the model is deemed safe, its status changes to False-Positive.

Example

When the Mend AI Research team detects a potentially suspicious code element, such as a reference to builtins.getattr(), it examines the context in which that element is used. Based on the findings, the team assigns either a Confirmed Unsafe status, if malicious intent is confirmed, or a False-Positive status, if the code is determined to be benign.
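A flagged `builtins.getattr` does not settle the question on its own, because the same import can appear in both a harmless pickle and a harmful one. The following Python sketch builds two pickles that both reference `builtins.getattr` (serializing an object does not run its payload; only loading does) and lists their imports and string constants with `pickletools`, which is the kind of context a reviewer would inspect. The classes and the `pickle_context` helper are hypothetical, not the Mend AI Research team's tooling, and to stay short the helper handles only the `GLOBAL` opcode used by pickle protocols 0-3.

```python
import pickle
import pickletools
from typing import Dict, List

# Opcodes whose argument is a text string.
STRING_OPS = {"STRING", "BINSTRING", "SHORT_BINSTRING",
              "UNICODE", "BINUNICODE", "SHORT_BINUNICODE", "BINUNICODE8"}


def pickle_context(data: bytes) -> Dict[str, List[str]]:
    """Collect imported globals and string constants from a protocol 0-3 pickle, without loading it."""
    context: Dict[str, List[str]] = {"globals": [], "strings": []}
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name == "GLOBAL":  # argument is "module name"
            module, name = arg.split(" ", 1)
            # Python 3 writes "builtins" as "__builtin__" in protocol 0-2 pickles.
            if module == "__builtin__":
                module = "builtins"
            context["globals"].append(f"{module}.{name}")
        elif opcode.name in STRING_OPS:
            context["strings"].append(arg)
    return context


class BenignAttr:
    """On load: getattr(complex(1, 2), "real"), which just returns 1.0."""
    def __reduce__(self):
        return (getattr, (complex(1, 2), "real"))


class ImportOs:
    """On load: __import__("os")."""
    def __reduce__(self):
        return (__import__, ("os",))


class SuspiciousAttr:
    """On load: getattr(__import__("os"), "system"), i.e. a handle to os.system."""
    def __reduce__(self):
        return (getattr, (ImportOs(), "system"))


if __name__ == "__main__":
    for obj in (BenignAttr(), SuspiciousAttr()):
        # Serializing is safe; only pickle.loads() would execute the payload.
        print(type(obj).__name__, pickle_context(pickle.dumps(obj, protocol=2)))
```

Both pickles report `builtins.getattr`, but only the second also imports `builtins.__import__` and carries the strings `os` and `system`. That surrounding context, not the flagged name alone, is what separates a benign use from a malicious one.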

Unsafe Models in the Mend Platform UI

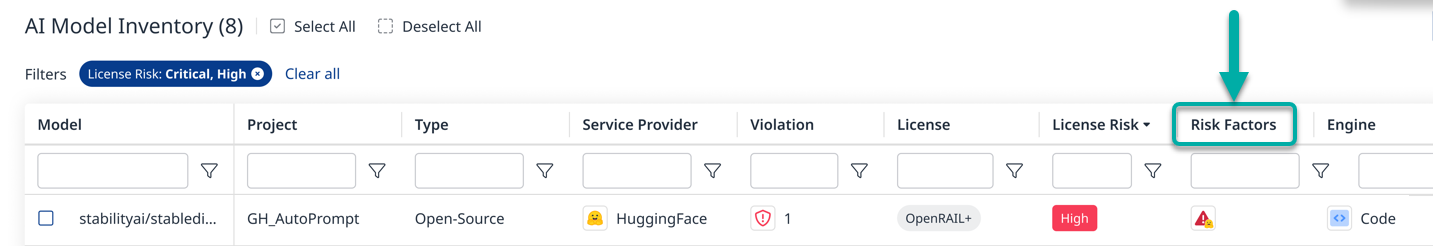

Model statuses are displayed in the Risk Factors column of the AI Models table.

Note: A License Risk column is also available in this table, providing compliance-related risk information about the model in question.

Examples of Verified Unsafe Models

| Model | File Path | Message | Verification |
|---|---|---|---|
| drhyrum/bert-tiny-torch-vuln | pytorch_model.bin | This file contains imports that may be unsafe. | Verified unsafe - opens a browser to https://pramuwaskito.org/hacker |
| ykilcher/totally-harmless-model | pytorch_model.bin | This file contains imports that may be unsafe. | Verified unsafe - opens a web browser upon loading |
| mkiani/gpt2-eval | pytorch_model.bin | This file contains imports that may be unsafe. | Verified unsafe - runs the ls command |
| mkiani/gpt2-system | pytorch_model (1).bin | This file contains imports that may be unsafe. | Verified unsafe - attempts to run a malicious.py file |
| mkiani/gpt2-system | pytorch_model.bin | This file contains imports that may be unsafe. | Verified unsafe - attempts to run a malicious.py file |
| mkiani/gpt2-runpy | pytorch_model.bin | This file contains imports that require your attention. Click on the pickle button to get more detail. | Verified unsafe - opens a browser and prints malicious code |
| mkiani/gpt2-exec | pytorch_model.bin | This file contains imports that may be unsafe. | Verified unsafe - runs the debugger "pdb.set_trace()" |
| sheigel/best-llm | pytorch_model.bin | This file contains imports that may be unsafe. | Verified unsafe - opens a browser and prints a statement |
| sheigel/best-llm | pytorch_model_v2.bin | This file contains imports that may be unsafe. | Verified unsafe - opens a browser and prints a statement |
| omershelef/mytest-omer | mytest.pth | This file is vulnerable to threat(s) PAIT-PYTCH-100. | Verified unsafe - prints a message into an image |
| omershelef/mytest-omer | resnet18-f37072fd.pth | This file is vulnerable to threat(s) PAIT-PYTCH-100. | Verified unsafe - prints a message into an image |

Examples of Safe Models and False Positives

| Model | File Path | Message | Verification |
|---|---|---|---|
| Bingsu/adetailer | | This file contains imports that may be unsafe. | Safe |
| anusha/t5-base-finetuned-wikiSQL-sql-to-en | | This file contains imports that require your attention. Click on the pickle button to get more detail. | Safe (False-Positive) |