Discovery of AI Models and Inference Providers

Note:

Mend AI is available as part of the Mend AppSec Platform.

Some features require a Mend AI Core or Premium entitlement for your organization.

Contact your Customer Success Manager at Mend.io to learn about enabling Mend AI.

Overview

This article explains how to use Mend AI to discover third-party AI models and inference providers in your project and application inventory in the Mend AppSec Platform.

Getting it done

Prerequisites

A Mend AI entitlement for your organization.

Mend AI discovers third-party AI models automatically as part of an SCA CLI scan (mend dep/mend sca) or a repository SCA scan (in supported repository integrations). However, it uses a separate scanner, which must be enabled for automatic AI discovery to take place as part of your SCA scans.

To run an SCA scan, please follow the steps in this article.

The Steps

Step 1 - Navigate to the application you wish to review under Applications.

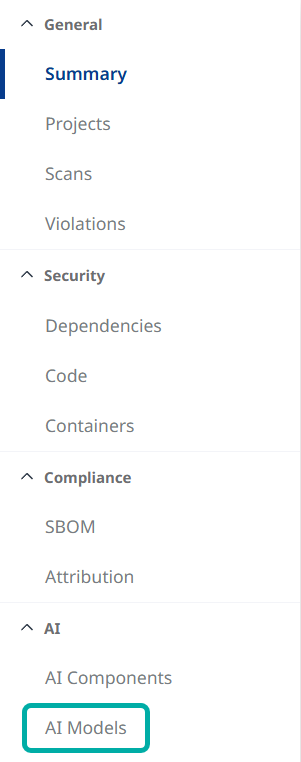

Step 2 - Once in the desired application, select AI → AI Models from the left-pane menu.

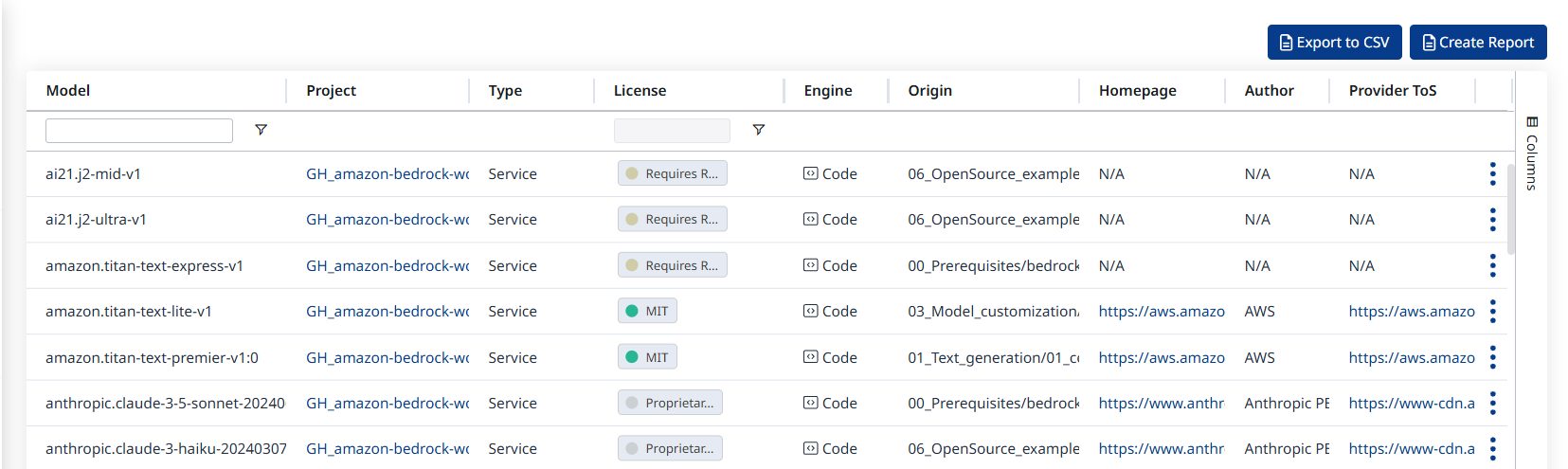

This will take you to the AI Model Inventory table, containing a list of Models, accompanied by additional information such as the number of Projects in the application that use the model in question, the model Category, Provider, License, Origin and more.

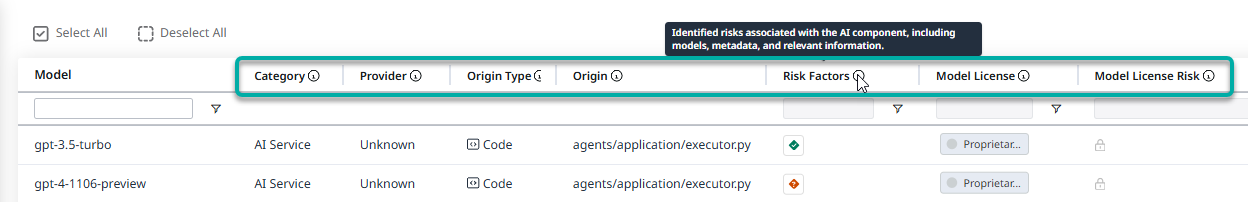

Note: Some column headers have tooltips (marked by an icon) containing a comprehensive explanation of the column:

The Risk Factors tooltip

Category: Indicates whether the AI model is served by an inference provider (AI Service) or runs locally (Self-Hosted).

Provider: If the model is not self-hosted, this refers to the external company that provides inference services and API access for the AI model.

Origin Type: The detection mechanism used to identify the component. Possible values:

code - component was detected through source code scanning.

artifacts - component was detected in AI artifacts during static or dynamic analysis.

Origin: The location or path where the component was discovered, indicating its source in the system or project.

Risk Factors: Identified risks associated with the AI component, including models, metadata, and relevant information.

Model License: Specifies the model’s licensing type. If open-source, it includes details about the license (e.g., MIT, Apache). Not applicable for proprietary or closed-source models.

Model License Risk: Indicates the level of risk associated with the model’s license. The risk level is assessed by Mend.io’s research team based on the license terms, compliance obligations, and potential restrictions on usage, redistribution, or modifications.

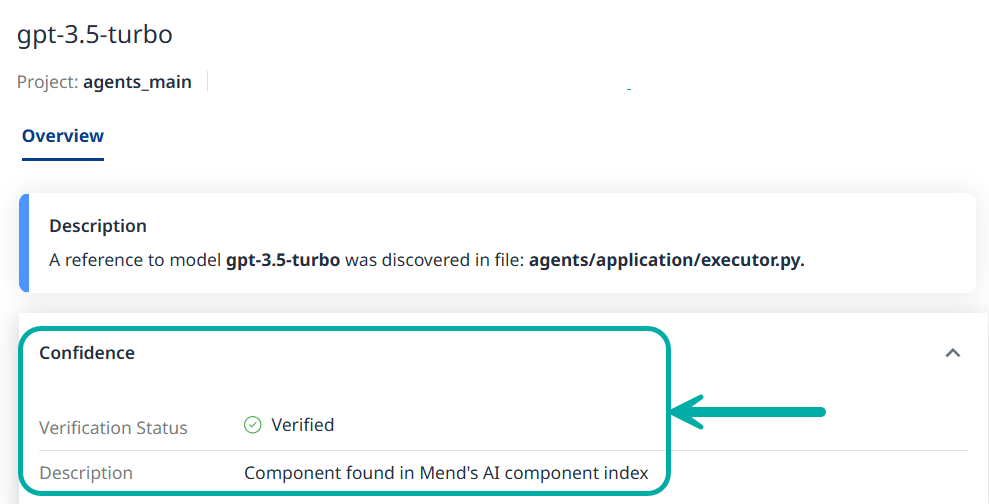

Verified by Mend:

✅ Verified: Component found in Mend's AI components index.

🔵 Not Verified: Not found in Mend's index.

Note that clicking the value in this column opens the sidebar, which displays this information in the Confidence section. See the example below.

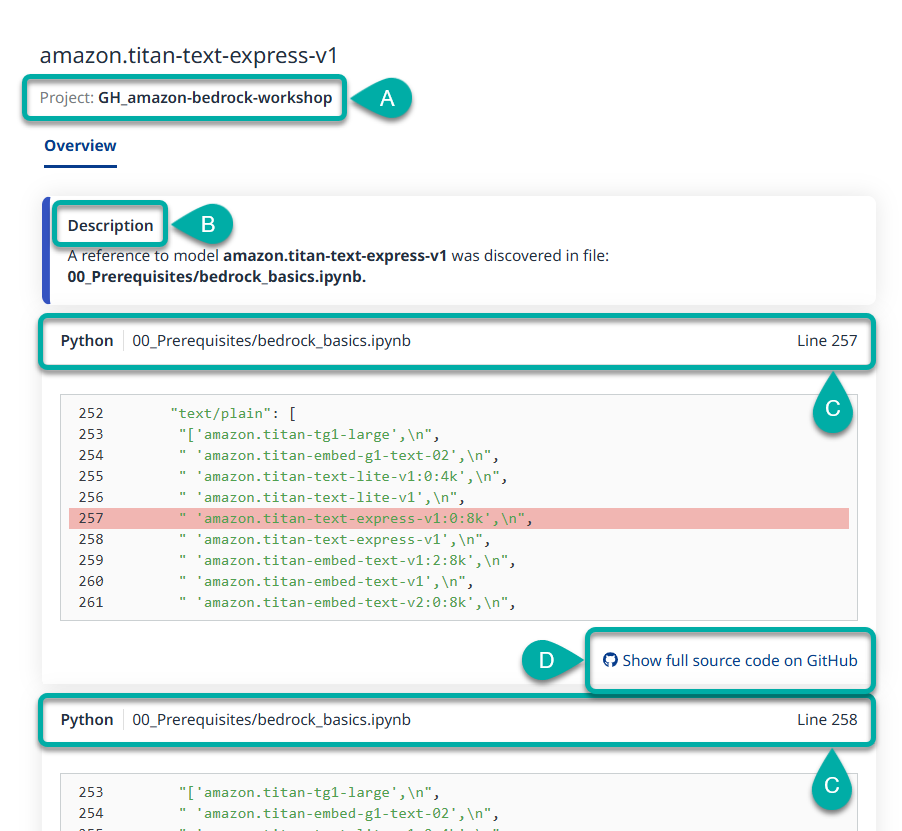

Step 3 - Click a desired finding in the AI Model Inventory table. This will take you to its Finding Details window, where you can get an overview of the finding:

The Overview tab will, among other things:

A. List the Project(s) in which the finding was detected.

B. Provide a Description of the finding.

C. Show you the relevant Lines in the code.

D. Allow you to review the source code in GitHub, by clicking “Show full source code on GitHub”.

Limitations

For open-source models, Mend AI covers Hugging Face and Kaggle.

For closed-source models accessed through inference providers, Mend AI covers models that can be served from OpenAI, Mistral, Anthropic and DeepSeek.

Google Vertex AI and Azure AI Foundry are planned to be added soon.

New Hugging Face and Kaggle models are expected to be available in Mend AI within 3 hours.

Hugging Face models whose repositories contain no file larger than 10 MB are not supported, because such models are expected to generate unnecessary noise.
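To make the 10 MB rule concrete, here is a minimal Python sketch of the size check it describes. It is an illustration only, not Mend AI's implementation: the function name, the sample repositories, and the reading of "10 MB" as 10,000,000 bytes are all assumptions.

```python
# Assumption: "10 MB" is read as 10 * 1000 * 1000 bytes; the article does not
# say whether decimal or binary megabytes are meant.
SIZE_THRESHOLD_BYTES = 10 * 1000 * 1000


def has_large_file(file_sizes, threshold=SIZE_THRESHOLD_BYTES):
    """Return True if any file in the repository is larger than the threshold.

    file_sizes maps a file name to its size in bytes.
    """
    return any(size > threshold for size in file_sizes.values())


# Hypothetical repositories, made up for this example.
weights_repo = {"config.json": 1_200, "model.safetensors": 548_000_000}
config_only_repo = {"config.json": 1_200, "README.md": 4_500}

print(has_large_file(weights_repo))      # True: contains a file over 10 MB
print(has_large_file(config_only_repo))  # False: would fall under the limitation
```

Under this reading, a repository that holds only small configuration or documentation files would not appear in the inventory, while one that ships model weights would.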

Supported Providers and Closed-Source Models via Pattern-Based Matching

Amazon Bedrock

APIs:

AWS Bedrock

Model Families:

nova, titan

Notes:

Current-generation models are supported (e.g., Titan v1).

Anthropic

APIs:

Anthropic API, AWS Bedrock, Google Vertex AI, suspected generic Anthropic-compatible APIs

Model Families:

claude-1.0, claude-1.1, claude-1.2, claude-1.3, claude-2.0, claude-2.1, claude-instant, claude-3-opus, claude-3-haiku, claude-3-sonnet, claude-3.5-sonnet, claude-3.5-haiku

Notes:

Versions are tracked by model generation (e.g., Claude 2, Claude 3).

Azure AI Foundry

DeepSeek

APIs:

DeepSeek API, AWS Bedrock, suspected generic DeepSeek-compatible APIs

Model Families:

deepseek-math, deepseek-coder, deepseek-coder-v2, deepseek-chat-v3, deepseek-v2, deepseek-vl, deepseek-r1, deepseek-llm

Notes:

We support detection across major v1, v2, and v3 families.

Google Vertex AI Model Garden

Mistral AI

APIs:

Mistral API, AWS Bedrock, Google Vertex AI, suspected generic Mistral-compatible APIs

Model Families:

mistral-7b, mistral-small, mistral-medium, mistral-large, mistral-nemo, mistral-embed, mistral-moderation, ministral-3b, ministral-8b, ministral-small, mixtral-8x7b, mixtral-8x22b, pixtral-large, pixtral-12b, codestral

Notes:

Major model versions and embedding models are supported.

OpenAI

APIs:

OpenAI API, suspected generic OpenAI-compatible APIs

Model Families:

gpt-4o, gpt-4, gpt-4-turbo, gpt-3.5-turbo, gpt-4o-mini, dall-e-2, dall-e-3, davinci, babbage, tts-1, whisper-1, omni-moderation, o1, text-embedding-ada-002, text-embedding-3-large, text-embedding-3-small, chatgpt-4o

Notes:

Multiple versions are supported, including official releases, previews, and date-tagged variants.
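As a rough illustration of pattern-based matching, the Python sketch below finds a few of the OpenAI families listed above in source text, including preview and date-tagged variants, and reports the line where each one appears. It is not Mend AI's scanner: the regular expression, the suffix shapes it accepts, and the `find_models` helper are assumptions made for this example.

```python
import re

# Illustrative subset of the OpenAI families listed above. Longer names come
# first so that "gpt-4o-mini" is not reported as "gpt-4o" or "gpt-4".
FAMILIES = ["gpt-4o-mini", "gpt-4-turbo", "gpt-4o", "gpt-4", "gpt-3.5-turbo", "o1"]

# A family name followed by an optional "-preview" and/or a date tag such as
# "-2024-08-06". These suffix shapes are assumptions for the sketch.
MODEL_RE = re.compile(
    r"(?<![\w.-])(?P<family>" + "|".join(re.escape(f) for f in FAMILIES) + r")"
    r"(?P<suffix>(?:-preview)?(?:-\d{4}-\d{2}-\d{2})?)(?![\w.-])"
)


def find_models(source):
    """Return (line_number, family, full_identifier) for each match in source."""
    hits = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for match in MODEL_RE.finditer(line):
            hits.append((lineno, match.group("family"), match.group(0)))
    return hits


# Hypothetical application code to scan.
sample = '''client.chat.completions.create(
    model="gpt-4o-2024-08-06",
    messages=messages,
)
fallback = "gpt-4o-mini"
'''

for hit in find_models(sample):
    print(hit)
# (2, 'gpt-4o', 'gpt-4o-2024-08-06')
# (5, 'gpt-4o-mini', 'gpt-4o-mini')
```

Grouping by family rather than by exact identifier is what lets a single pattern cover official releases, previews, and date-tagged variants of the same model.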

watsonx.ai

APIs:

watsonx.ai

Model Families:

Granite, Llama, Mistral AI

Note: Generic API Detection

When providers expose models over third-party, proxy, or unofficial APIs, Mend AI uses behavioral and metadata analysis to detect suspected generic usage for OpenAI, Anthropic, Mistral AI, and DeepSeek models.
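The distinction between an official API and a suspected generic one can be pictured with a small Python sketch. It looks only at the endpoint host, which is a much simpler signal than the behavioral and metadata analysis described above. The host mapping and the `classify_endpoint` helper are assumptions for illustration, not Mend AI's detection logic.

```python
from urllib.parse import urlparse

# Official API hosts as commonly documented by each provider. This mapping is
# an assumption of the sketch.
OFFICIAL_HOSTS = {
    "OpenAI": "api.openai.com",
    "Anthropic": "api.anthropic.com",
    "Mistral": "api.mistral.ai",
    "DeepSeek": "api.deepseek.com",
}


def classify_endpoint(provider, base_url):
    """Label an endpoint as the provider's own API or a suspected generic one."""
    host = urlparse(base_url).hostname
    if host == OFFICIAL_HOSTS[provider]:
        return f"{provider} API"
    return f"suspected generic {provider}-compatible API"


print(classify_endpoint("OpenAI", "https://api.openai.com/v1"))
print(classify_endpoint("OpenAI", "http://llm-proxy.internal:8080/v1"))
```

The first call yields "OpenAI API". The second, which points at a hypothetical internal proxy, yields "suspected generic OpenAI-compatible API".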