AI Technologies Inventory

Overview

A technology framework refers to any technology used by a piece of software. This can include a product, a category of products, a protocol, or any other indicator that helps us understand the software's usage (e.g., AI, LLM, RPC, payments).

An AI framework is essentially a group of AI technologies, listed as High-Level Technology in the Mend AI user interface. It includes tools, libraries, and methodologies for developing, deploying, and managing AI models. Examples include model frameworks (e.g., TensorFlow, PyTorch), AI agent orchestration tools (e.g., LangChain, LlamaIndex), and security frameworks that enforce governance and compliance (e.g., Guardrails AI, TruLens).

In Mend AI, framework identification detects and categorizes AI-related frameworks in enterprise codebases, helping security teams assess risk and enforce governance policies.

The related package evidence is available on the AI Technologies inventory page.

Getting it done

To access your AI Technologies inventory, go to the AI section in the left navigation pane and click AI Technologies.

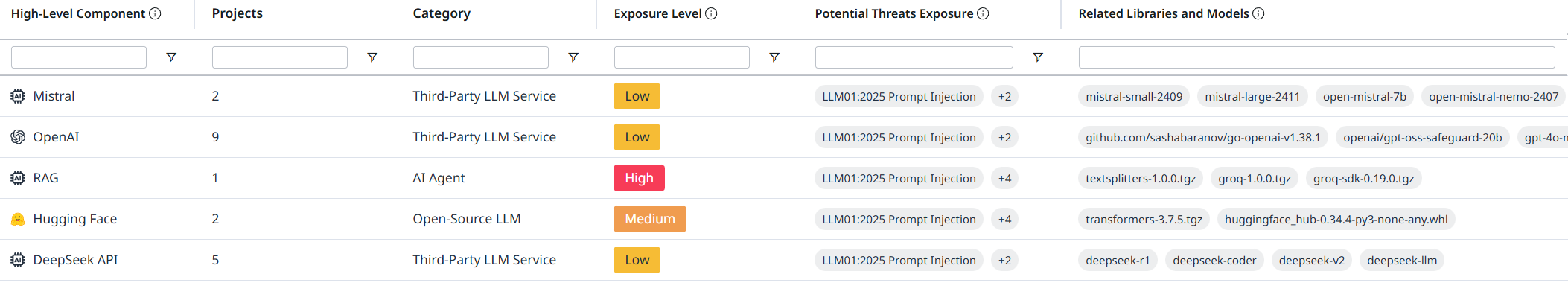

This opens the AI Technologies inventory table, which contains the following columns.

Table Columns

High-Level Technology denotes a high-level AI infrastructure technology made up of a group of related AI elements, including libraries, tools, and environments used for model development and deployment.

Projects shows the projects in which the high-level technology was detected. By default, this column displays the number of such projects. Clicking the number opens the project list.

Category is the category of the high-level technology (e.g., Third-Party LLM Service, AI Agent, Open-Source LLM).

Exposure Level is the level of risk associated with the AI technology, as assessed by Mend.io’s research team. The assessment considers factors such as security vulnerabilities, compliance issues, and governance risks. Levels include Low, Medium, High, and Critical.

Potential Threats Exposure lists the specific threats related to the AI technology, mapped from the OWASP Top 10 for LLM Applications. These may include prompt injection, model poisoning, insecure output handling, excessive agency, or training data extraction risks.

Related Libraries and Models lists the components within the AI infrastructure that relate to the application, such as packages, models, and inference providers.

Note:

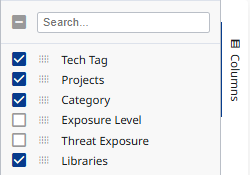

1. Click the Columns button at the right edge of the screen to add or remove columns.

All columns are filterable. For instance, you can use the filter box under the High-Level Technology column header to filter the results by technology name.

Some column headers have tooltips (marked by an icon) that explain the column in detail.

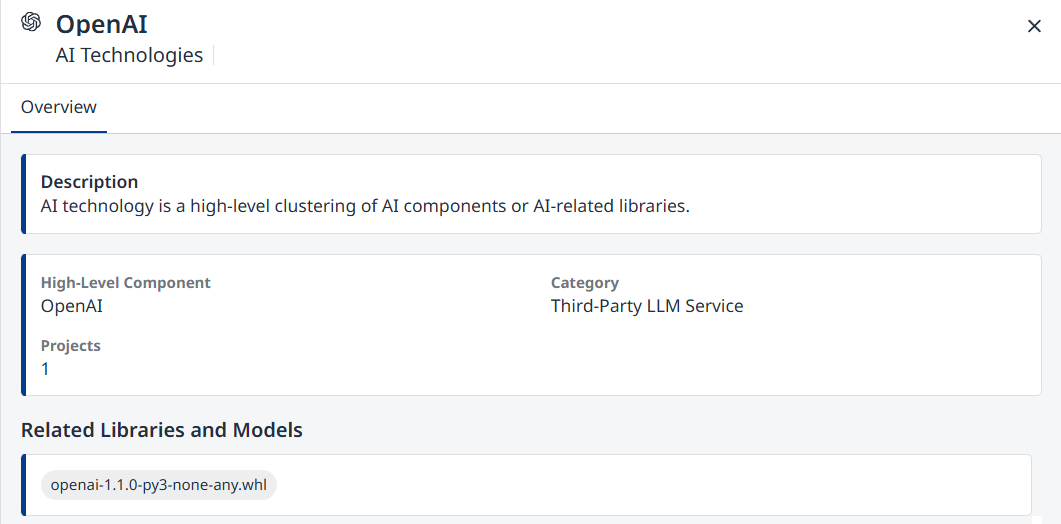

Side Panel

Clicking any row in the table opens a side panel with an overview of the selected AI technology.

Example: